This post is about NuExtract 2.0 — check the sister post about the NuExtract platform.

NuExtract is an LLM specialized in extracting structured information from documents: it returns a JSON output from a document and a template (a.k.a. schema). We released NuExtract 1.0 about a year ago, NuExtract 1.5 about 6 months ago, and have been pleased to see their popularity (several million downloads) and performance (similar to GPT-4o while much smaller).

Nevertheless, NuExtract 1.0 and 1.5 have important limitations: they can only perform pure extraction (copy-paste of the input), and only on text documents. We thus decided to add 3 key features to NuExtract 2.0: support for image documents, extraction beyond pure copy-paste, and in-context learning.

On top of that, we gave a strong push on the performance side of things. This involved creating datasets of higher quality, changing training procedures, and using better base models:

We are releasing 3 open-source versions of NuExtract 2.0:

These models perform impressively well. However, one last question kept nagging us: what if we go bigger? Would the performance still improve compared to similar-sized models? After all, big models are already pretty good at extraction…

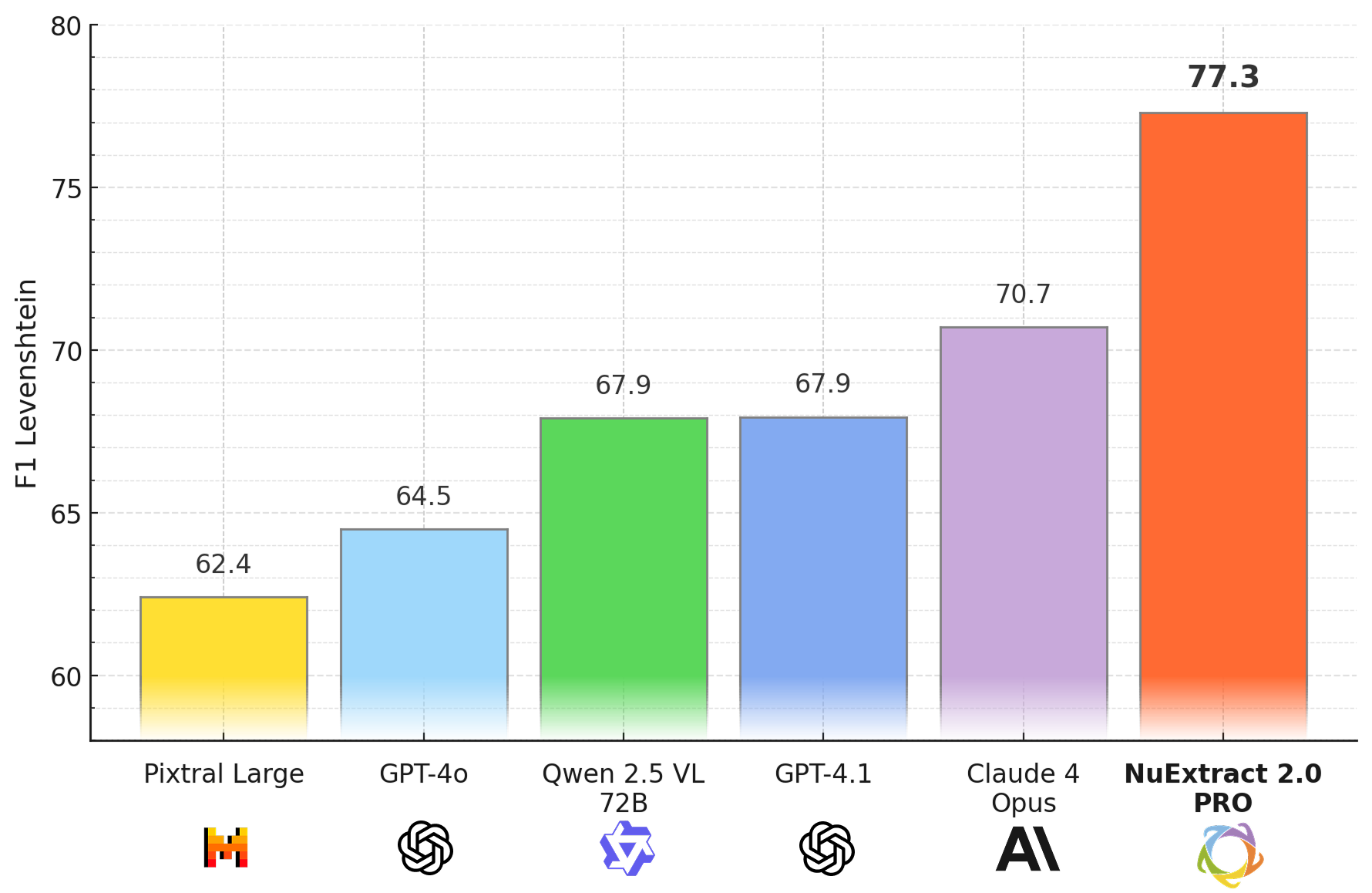

We thus trained a substantially bigger model — NuExtract 2.0 PRO — and were stunned by the results: the model simply outclasses frontier LLMs like GPT-4.1 and Claude 4 Opus!

Driven by these results, we created a platform entirely dedicated to NuExtract 2.0 PRO — nuextract.ai — where you can define extraction tasks and use NuExtract via API. Give it a try!

Overall, it feels like NuExtract is coming out of Beta. We can’t wait to see how it will be used.

Let's now dive into the features and analyze performance in more detail.

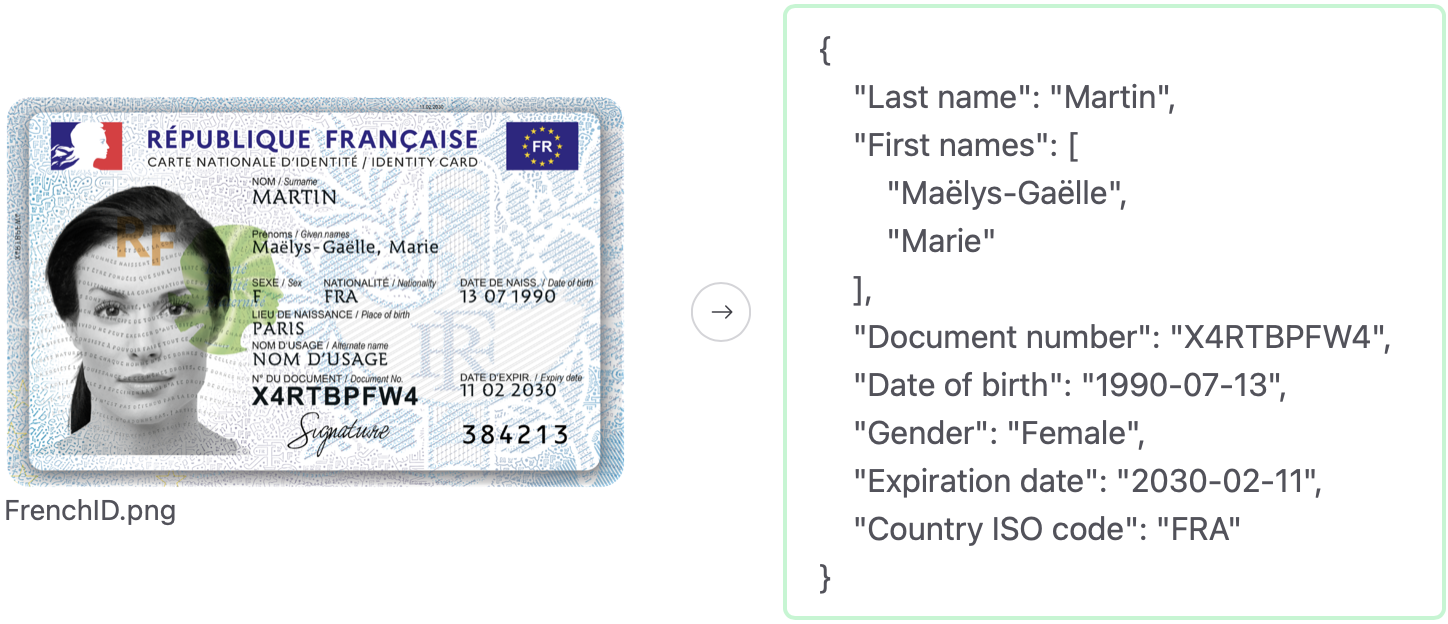

Documents are not just text — they can be formatted, like PDFs or spreadsheets, and they can be scanned documents, like this ID card:

The traditional way to handle such documents is to capture their raw text content, either directly from the file for PDFs, or via an Optical Character Recognition (OCR) step for scanned documents. The problem with such a procedure is that it leaves out formatting information: titles are merged with paragraphs, font sizes/types/colors are ignored, tables lose their structure, and, importantly, diagrams and figures are lost.

A more recent approach is to extract directly from images with a Vision Language Model (VLM). VLMs have improved a lot lately, to the point that traditional OCR is now largely unnecessary. We thus decided to give NuExtract 2.0 "eyes" and make it a VLM.

We use Qwen 2.5 VL and Qwen 2.0 VL as our base models, which are excellent at handling both text and images. With these multimodal models, you can insert multiple images in the middle of text instructions. Text is tokenized as usual (in words or subwords) and images are tokenized by patches of 28×28 pixels and embedded via a vision module. This unified sequence of token embeddings is then processed by a regular transformer. This is an elegant and flexible way to handle text and images, which has the benefit of allowing in-context learning. Importantly, this architecture does not have a hard limit on image size (only the context size): NuExtract 2.0 can thus process arbitrarily large (or high-resolution) images.
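To get a feel for how image size translates into context usage, here is a rough back-of-the-envelope sketch in Python. It assumes, as described above, that one visual token covers a 28×28-pixel region, and it ignores the resizing that the real image processor applies as well as any special tokens around the image, so treat its numbers as estimates only. `estimate_image_tokens` is a hypothetical helper, not part of any library.

```python
import math

# Assumption from the text: one visual token per 28x28-pixel region.
PATCH_SIZE = 28


def estimate_image_tokens(width_px: int, height_px: int, patch: int = PATCH_SIZE) -> int:
    """Rough count of visual tokens for an image.

    Ignores the model's own resizing step and any special tokens, so this is
    only an order-of-magnitude estimate.
    """
    return math.ceil(width_px / patch) * math.ceil(height_px / patch)


if __name__ == "__main__":
    print(estimate_image_tokens(1120, 1400))  # 40 x 50 patches -> 2000
    print(estimate_image_tokens(2240, 2800))  # doubling both sides -> 8000
```

Doubling the resolution quadruples the token count, which is why, with no hard limit on image size, NuExtract 2.0's 32k-token context becomes the practical bound on how large or detailed an image can be.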

We then teach these base models to extract structured information from images by adding various correctly-extracted PDFs and scanned documents into the training set of NuExtract. As always, data diversity and extraction quality are key here.

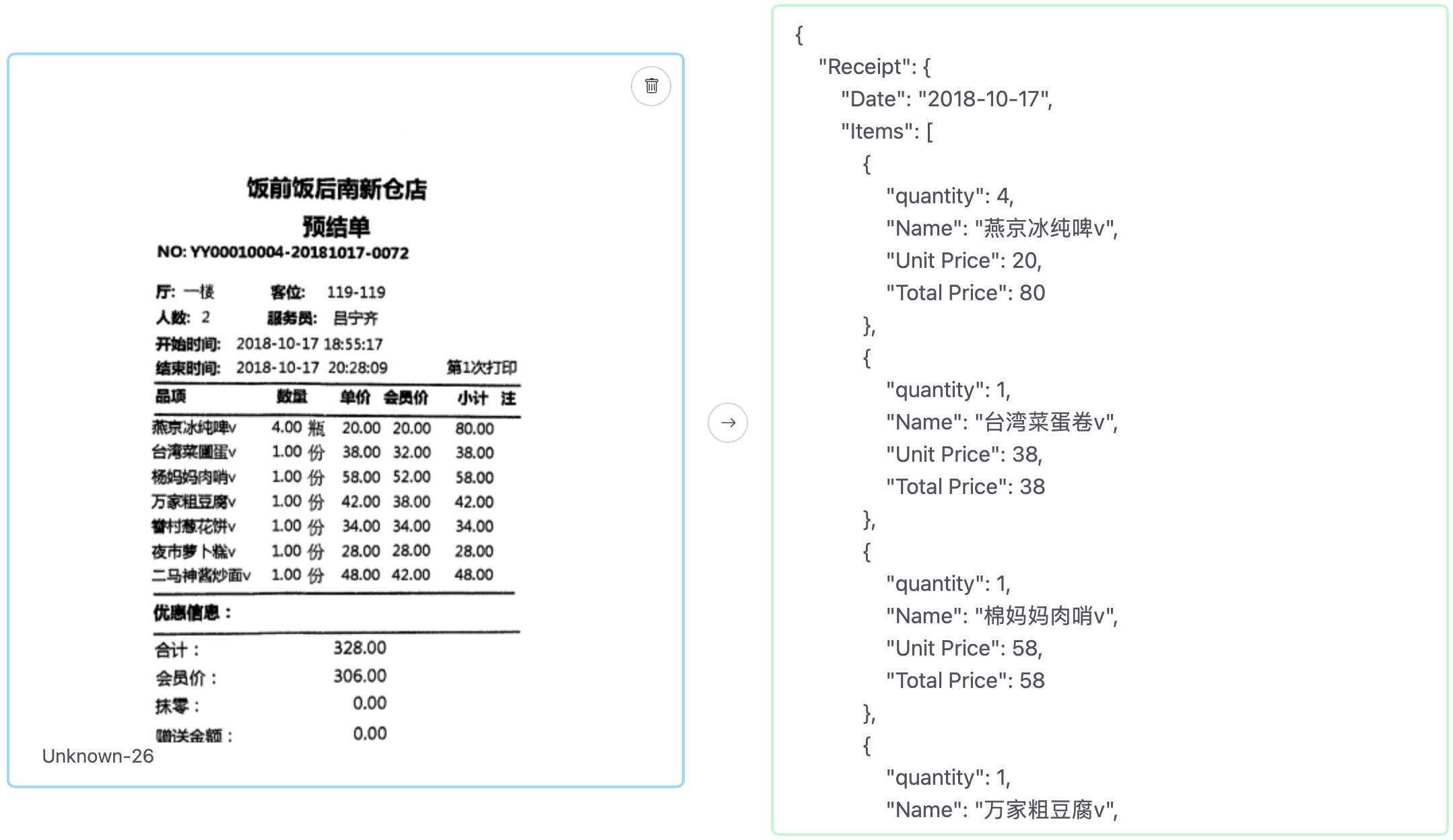

We find that the resulting model can correctly extract information from all kinds of image documents. Here is an example with a scanned receipt, in Chinese:

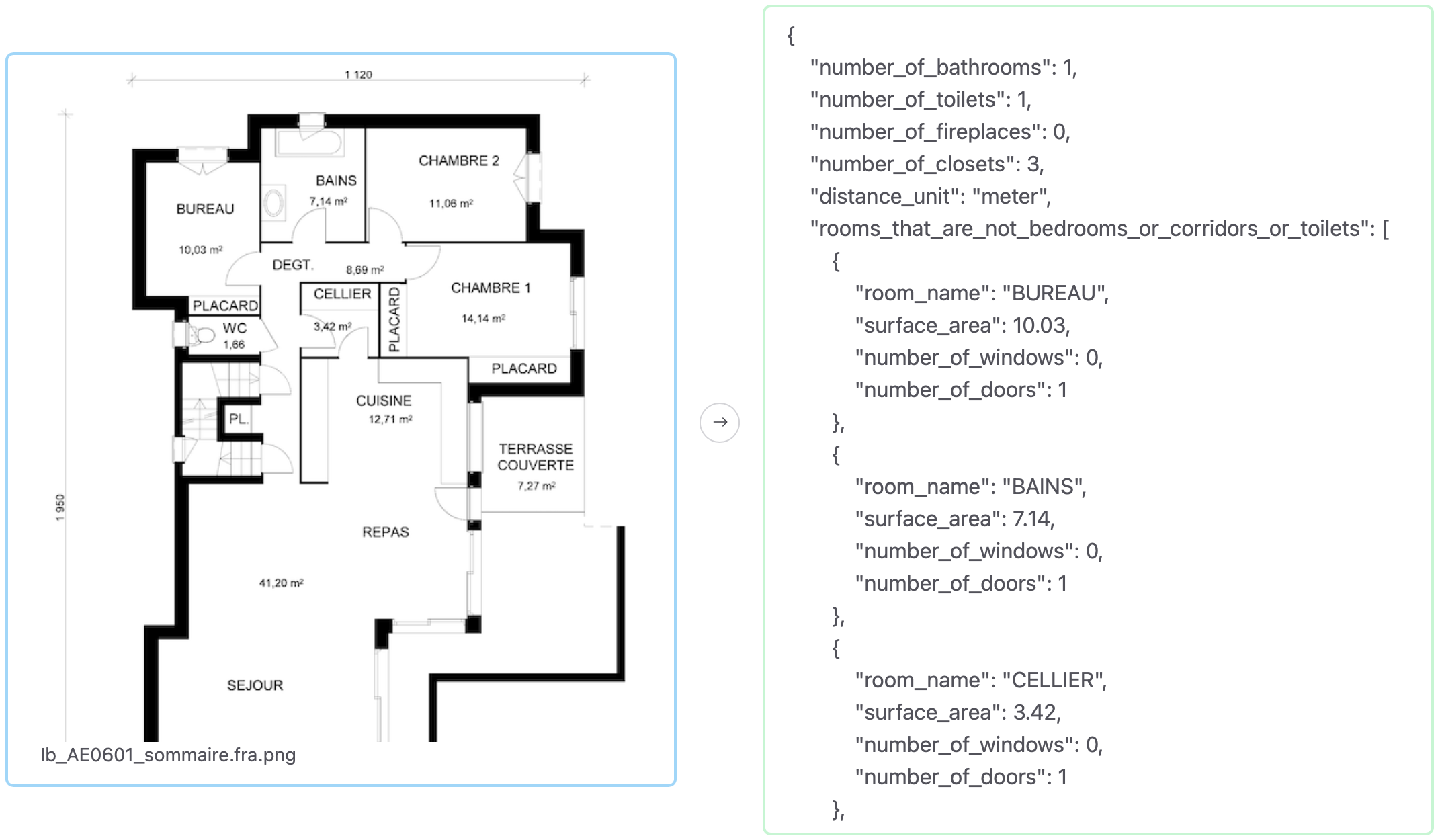

Here is an example with a scanned floor plan:

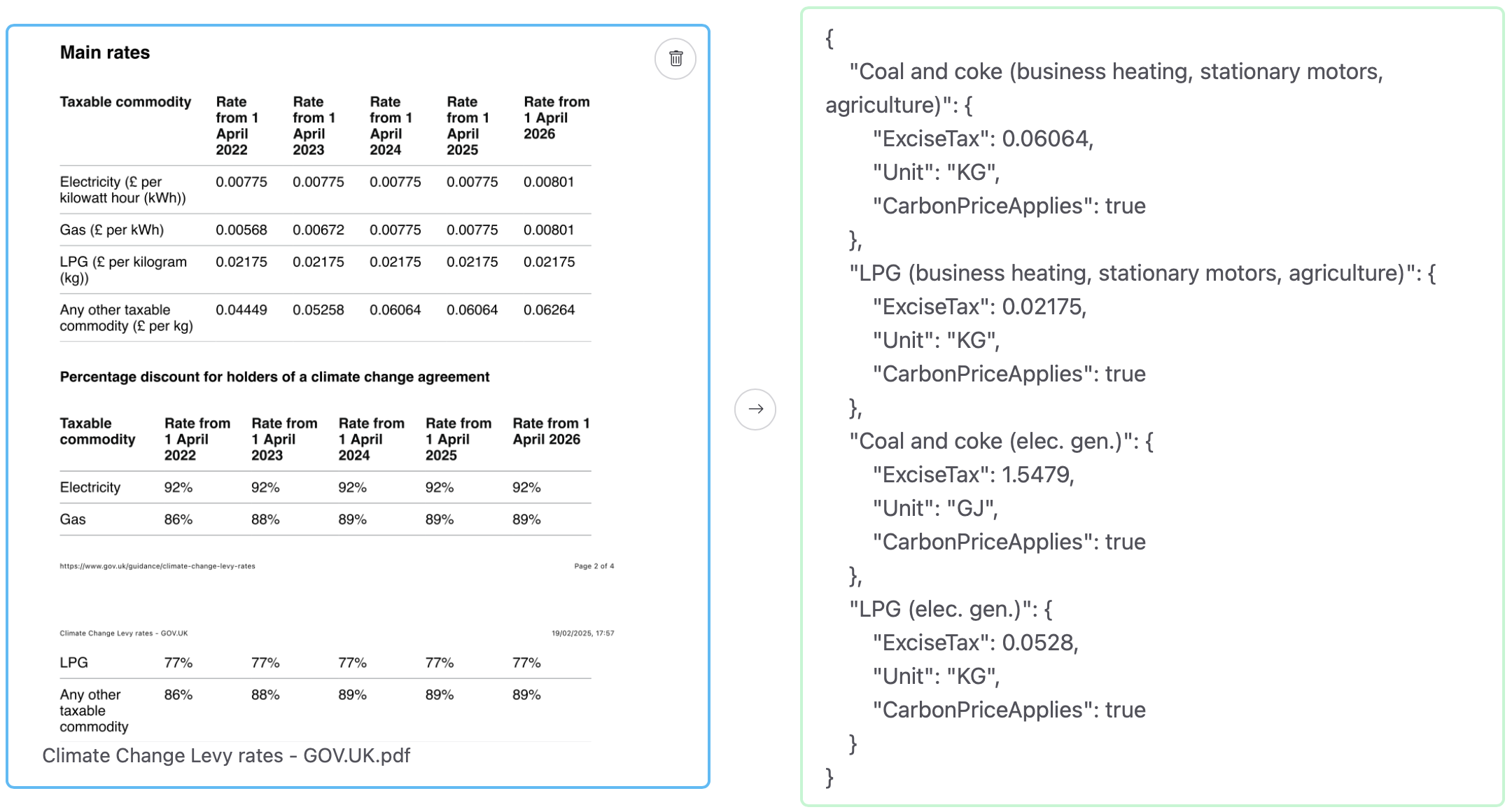

As you can see, the OCR capability of this model is pretty good. This is confirmed when we try it on PDFs converted to images, like in this multi-page example:

The results are often better than when extracting from the raw text. However, for "simple" PDFs (e.g., no complex tables or diagrams), we still advise processing the raw text content.

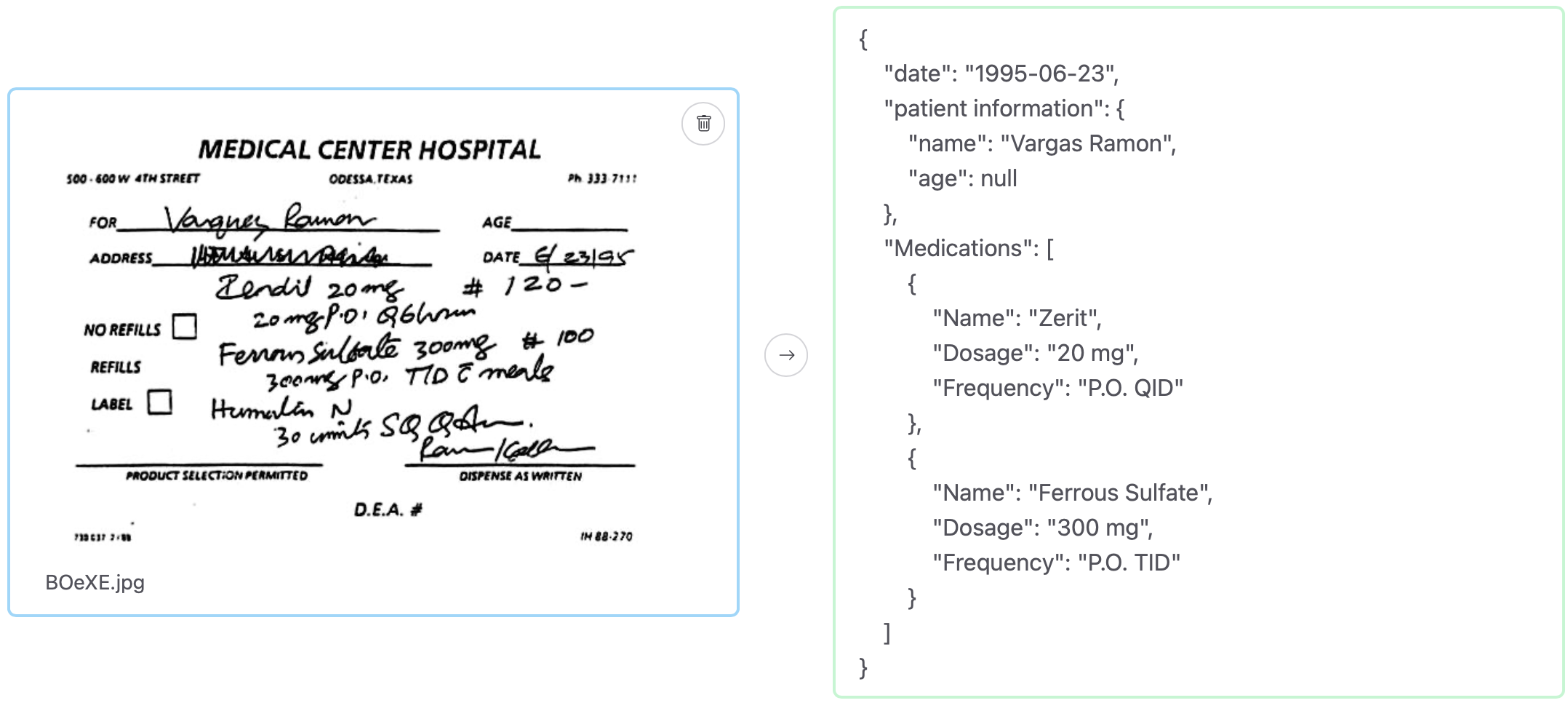

NuExtract 2.0 can also handle handwritten text (typically to parse forms), although it is sometimes challenging, like in the case of this prescription:

For such difficult applications, we find that a fine-tuning step is still required to teach domain-specific knowledge and obtain better performance.

Overall, this transition to a multimodal model is a success. Importantly, we find that it does not affect performance on text documents, and the vision module is relatively small. For these reasons, we only release a multimodal model, and do not plan to release text-only models in the future.

NuExtract 1.0 and 1.5 are pure extractors: they copy-paste text from the input document. Pure extraction is a common use case, but you sometimes need to go beyond it. For example, you might want to:

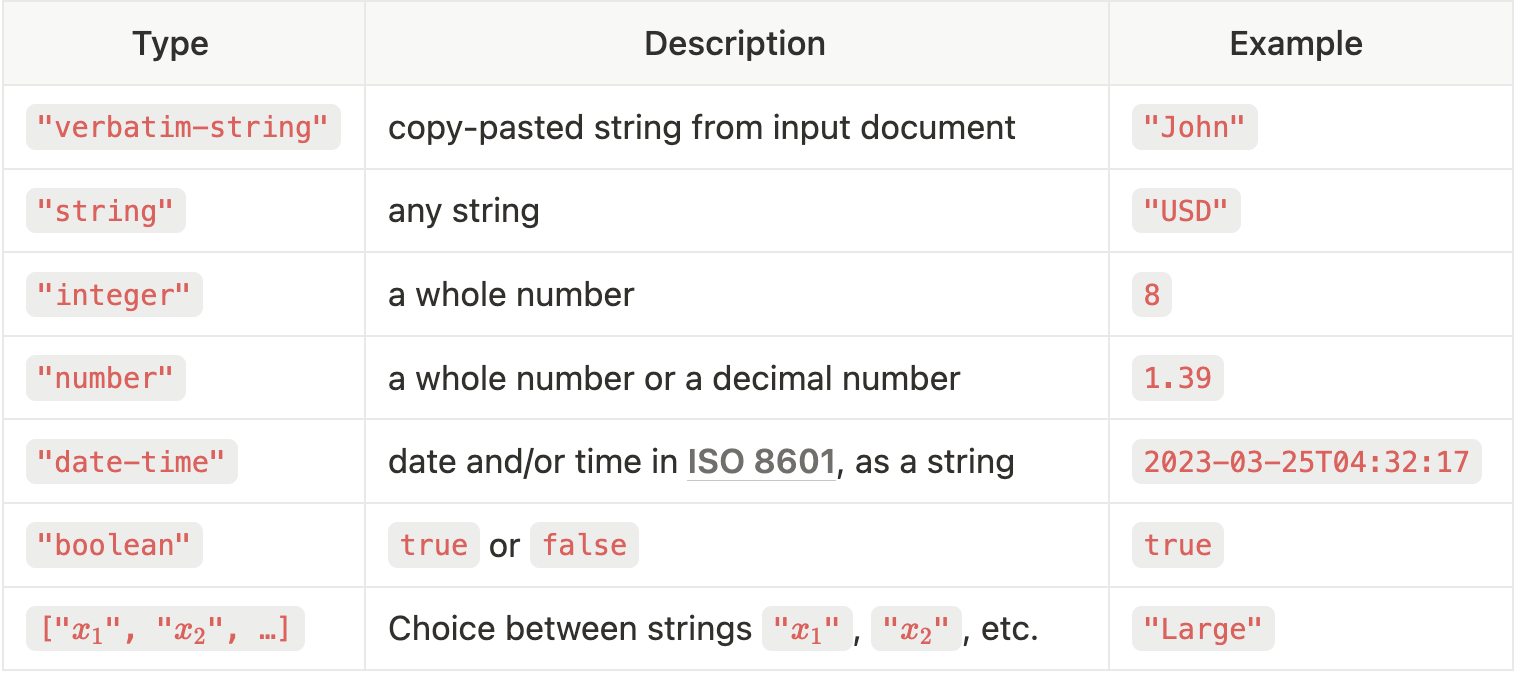

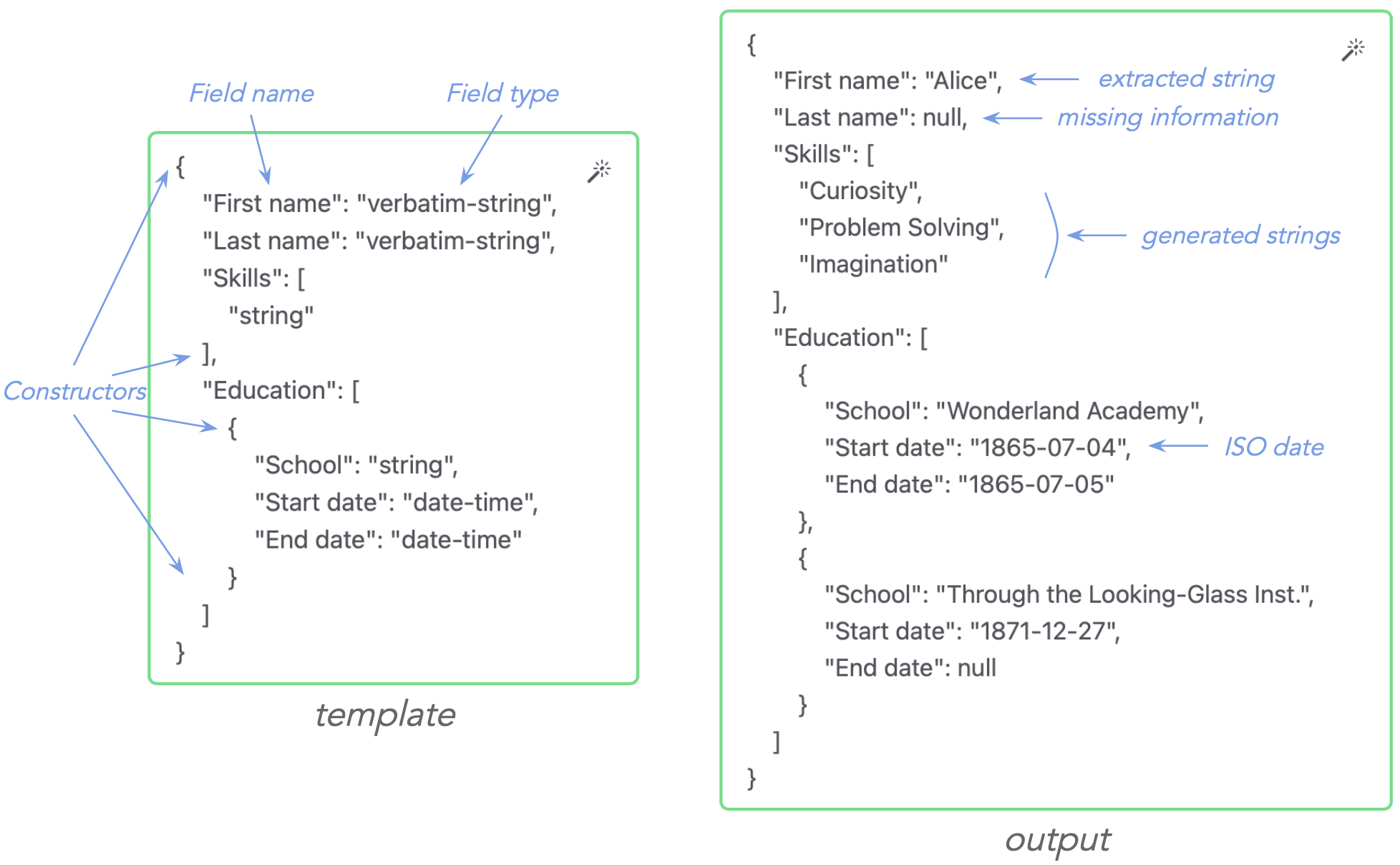

To allow such extractions, we could simply remove the pure-extraction constraint from NuExtract. The issue is that this constraint is very effective at reducing hallucinations when you know that the answer can only appear verbatim in the document. To get the best of both worlds, we updated the template format of NuExtract to include type specifications. In particular, we include the "verbatim-string" type (unique in its kind), which tells the model to perform a pure extraction, while the classic "string" type allows the model to generate freely. We also included a "choice" type to define possible classes (a.k.a. "enum"), and additional types to handle dates and numbers. Here is the list of possible types:

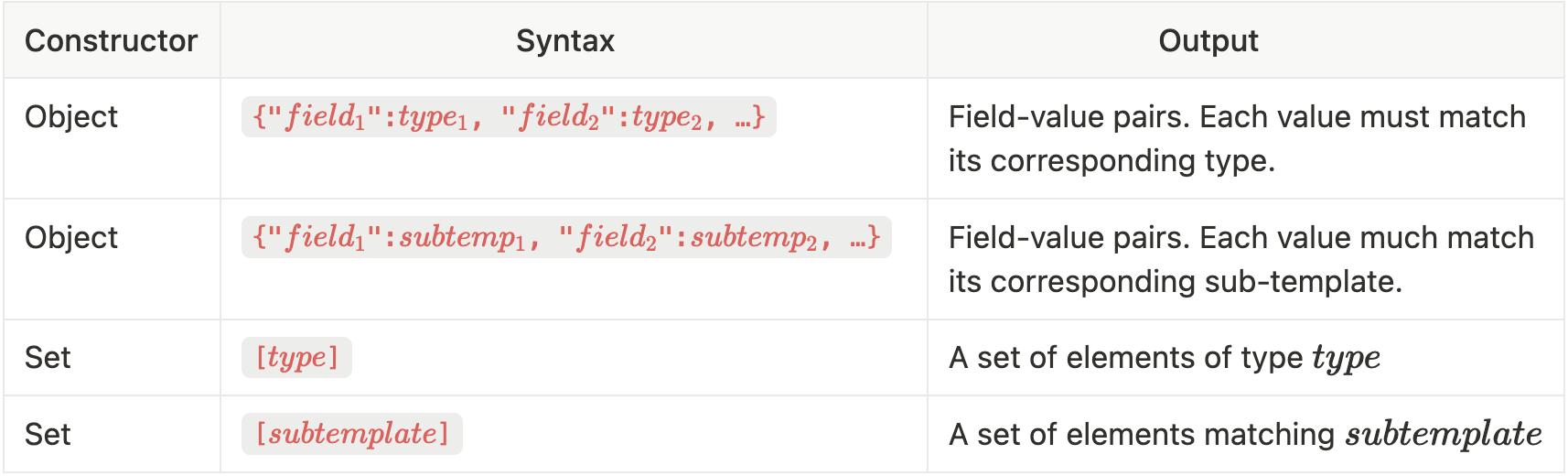

You can combine these types in the template with the same object and set constructors as before:

We also decided to use null to represent missing information instead of empty strings.

Here is an example of this new template format, along with a compatible extraction output:

You can see named fields such as "first name" indicating what to extract, type specifications such as "string" indicating the type/format that the extracted values should have, and the constructors {…} (object) and […] (set) defining the structure of the output.
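As an illustration of what these types mean in practice, here is a small Python sketch that checks an extraction output against a template. It is not part of NuExtract: `check` is a hypothetical helper. It only covers the types named in the text ("verbatim-string", "string", and choices); the exact names of the date and number types are not given here, so they are left out. Reading a one-element list such as `["verbatim-string"]` as a set and a multi-element list of strings as a choice is also an assumption of this sketch.

```python
# Type names taken from the post; treating a one-element list as a "set" and a
# multi-element list of strings as a "choice" is an assumed convention.
SCALAR_TYPES = {"verbatim-string", "string"}


def check(value, spec, document, path="$"):
    """Return a list of problems in `value` w.r.t. template `spec` (empty list = valid)."""
    if value is None:  # null means "information not found" and is always allowed
        return []
    if isinstance(spec, dict):  # object constructor {...}
        if not isinstance(value, dict):
            return [f"{path}: expected an object"]
        problems = []
        for key, sub_spec in spec.items():
            if key not in value:
                problems.append(f"{path}.{key}: missing key")
            else:
                problems += check(value[key], sub_spec, document, f"{path}.{key}")
        return problems
    if isinstance(spec, list) and len(spec) == 1 and (
        isinstance(spec[0], dict) or (isinstance(spec[0], str) and spec[0] in SCALAR_TYPES)
    ):  # set constructor [...]
        if not isinstance(value, list):
            return [f"{path}: expected a list"]
        problems = []
        for i, item in enumerate(value):
            problems += check(item, spec[0], document, f"{path}[{i}]")
        return problems
    if isinstance(spec, list):  # choice: the value must be one of the listed options
        return [] if value in spec else [f"{path}: {value!r} is not one of {spec}"]
    if spec == "verbatim-string":  # pure extraction: must be copied from the document
        if isinstance(value, str) and value in document:
            return []
        return [f"{path}: {value!r} does not appear verbatim in the document"]
    if spec == "string":  # free generation: any string is acceptable
        return [] if isinstance(value, str) else [f"{path}: expected a string"]
    return [f"{path}: unsupported type {spec!r}"]


template = {
    "first name": "verbatim-string",
    "summary": "string",
    "sentiment": ["positive", "negative", "neutral"],
    "emails": ["verbatim-string"],
}
document = "Contact Ana Silva at ana@example.com. Great service!"
output = {"first name": "Ana", "summary": "A satisfied customer.",
          "sentiment": "positive", "emails": ["ana@example.com"]}
print(check(output, template, document))  # []
```

The verbatim check is a plain substring test, so it is sensitive to whitespace and case; a production validator would likely normalize both sides.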

This is a minimalist template format, designed to be easy to read and write, both for humans and LLMs. More importantly, this format lets you be precise about what you want to extract, which improves performance.

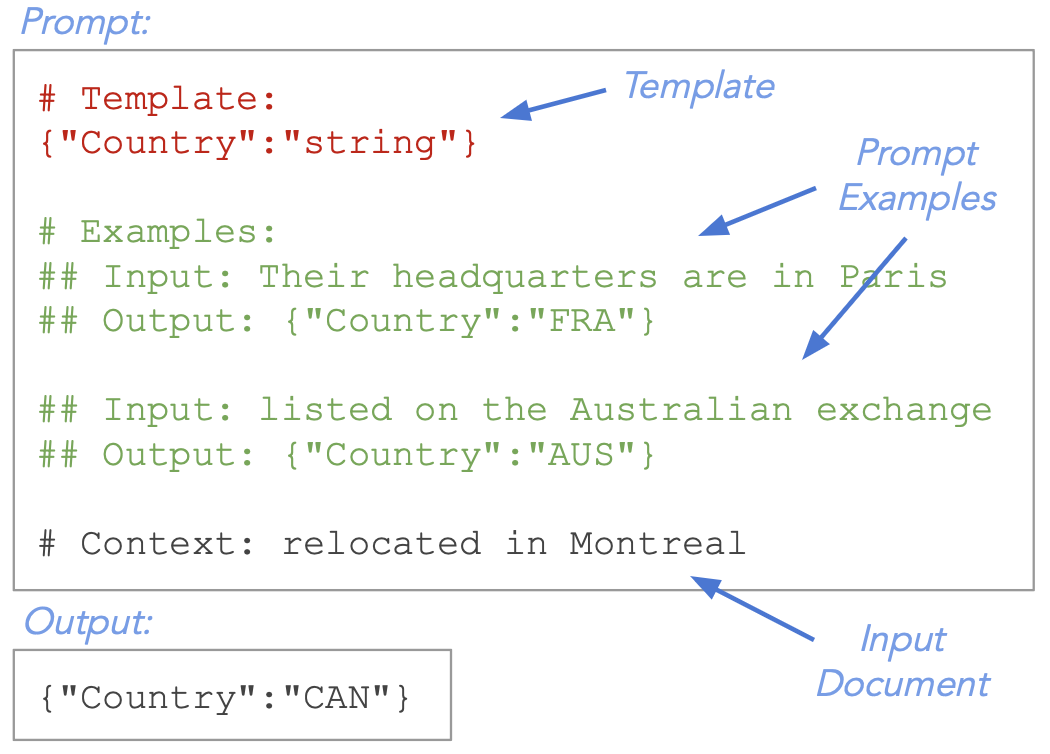

Templates are a great way to define what to extract, but not a perfect one. For example, in the template {"address": "string"}, it is not clear how the address should be formatted. Similarly, in the template {"sentiment": ["positive", "negative", "neutral"]}, "sentiment" can mean different things.

One general way to resolve such ambiguities is to provide examples of correct extraction to the model — a kind of customization. The traditional way to customize a model via examples is to modify its parameters (a.k.a. fine-tuning) in such a way that the model correctly performs the task on the examples given. This deep form of customization is resource-intensive and usually requires many examples.

LLMs have opened the door to another form of customization via examples: you just put these examples in the prompt of the LLM. This is called In-Context Learning (ICL) and is very effective with modern LLMs. The downside of ICL is that you are limited by the total context size of the LLM (which is 32k tokens for NuExtract 2.0), so you generally can only leverage a few of these prompt examples. Still, even a few prompt examples can improve performance substantially.
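Because every in-context example eats into the same context budget as the document and the output, it helps to count before adding examples. The Python sketch below assembles a few-shot prompt and keeps examples only while they fit in 32k tokens. The prompt layout (`# Template:`, `# Example document:`, and so on) and the 4-characters-per-token estimate in `count_tokens` are hypothetical placeholders, not NuExtract's actual prompt format or tokenizer.

```python
import json

CONTEXT_TOKENS = 32_000  # NuExtract 2.0 context size, as stated in the post


def count_tokens(text: str) -> int:
    """Hypothetical stand-in (~4 characters per token); use the real tokenizer in practice."""
    return max(1, len(text) // 4)


def build_prompt(template, document, examples, max_output_tokens=2_000):
    """Build a few-shot prompt, keeping examples (in order) only while they fit.

    `examples` is a list of (example_document, example_output) pairs.
    Returns the prompt and the number of examples that were kept.
    """
    header = "# Template:\n" + json.dumps(template, indent=2)
    tail = "# Document:\n" + document + "\n# Output:\n"
    budget = CONTEXT_TOKENS - max_output_tokens - count_tokens(header) - count_tokens(tail)
    shots = []
    for example_doc, example_output in examples:
        shot = ("# Example document:\n" + example_doc
                + "\n# Example output:\n" + json.dumps(example_output))
        cost = count_tokens(shot)
        if cost > budget:
            break  # stop at the first example that no longer fits
        shots.append(shot)
        budget -= cost
    return "\n\n".join([header, *shots, tail]), len(shots)


template = {"sentiment": ["positive", "negative", "neutral"]}
examples = [("Loved it!", {"sentiment": "positive"}),
            ("Awful service.", {"sentiment": "negative"})]
prompt, kept = build_prompt(template, "It was fine.", examples)
print(kept)  # 2
```

Keeping examples in the given order means you decide which ones matter most by placing them first.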

We taught NuExtract to perform in-context learning. To do so, we added to the training set extractions performed with examples in the prompt, such as:

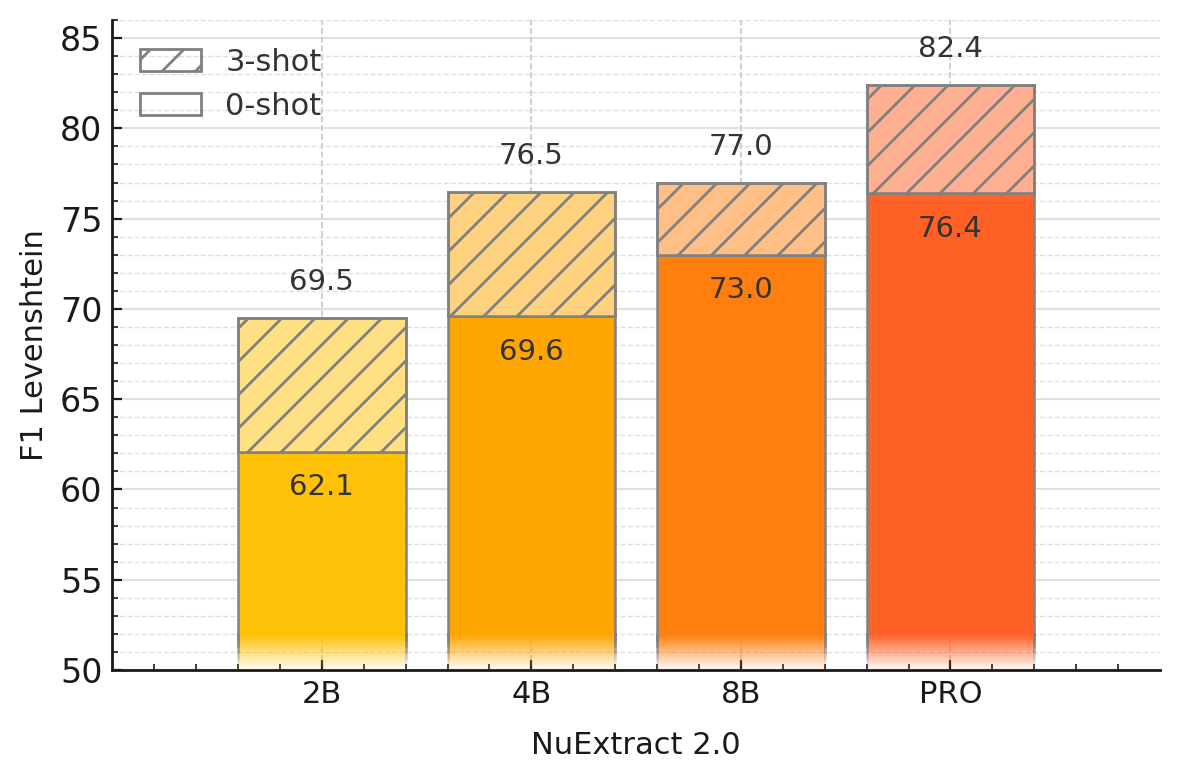

We do this both for text and image documents. Here is the average performance gain that we get when going from no example in the prompt (0-shot) to 3 examples in the prompt (3-shot):

We can see that adding even a few examples can improve extractions substantially. For example, NuExtract 2.0 PRO gains 6 F-score points, which is a lot at this level of performance!

In-context learning is thus a light but effective alternative to fine-tuning NuExtract.

Let's now analyze the performance of NuExtract in more detail. To do so, we use our extraction benchmark composed of 1000+ extraction examples grouped into 21 extraction problems. Documents are text or images, and span multiple languages. We measure performance by averaging similarity scores between predictions and ground truths. We compare NuExtract 2.0 to the best (and carefully prompted) generic models available. We are working on releasing our benchmark, metrics, and protocol publicly.

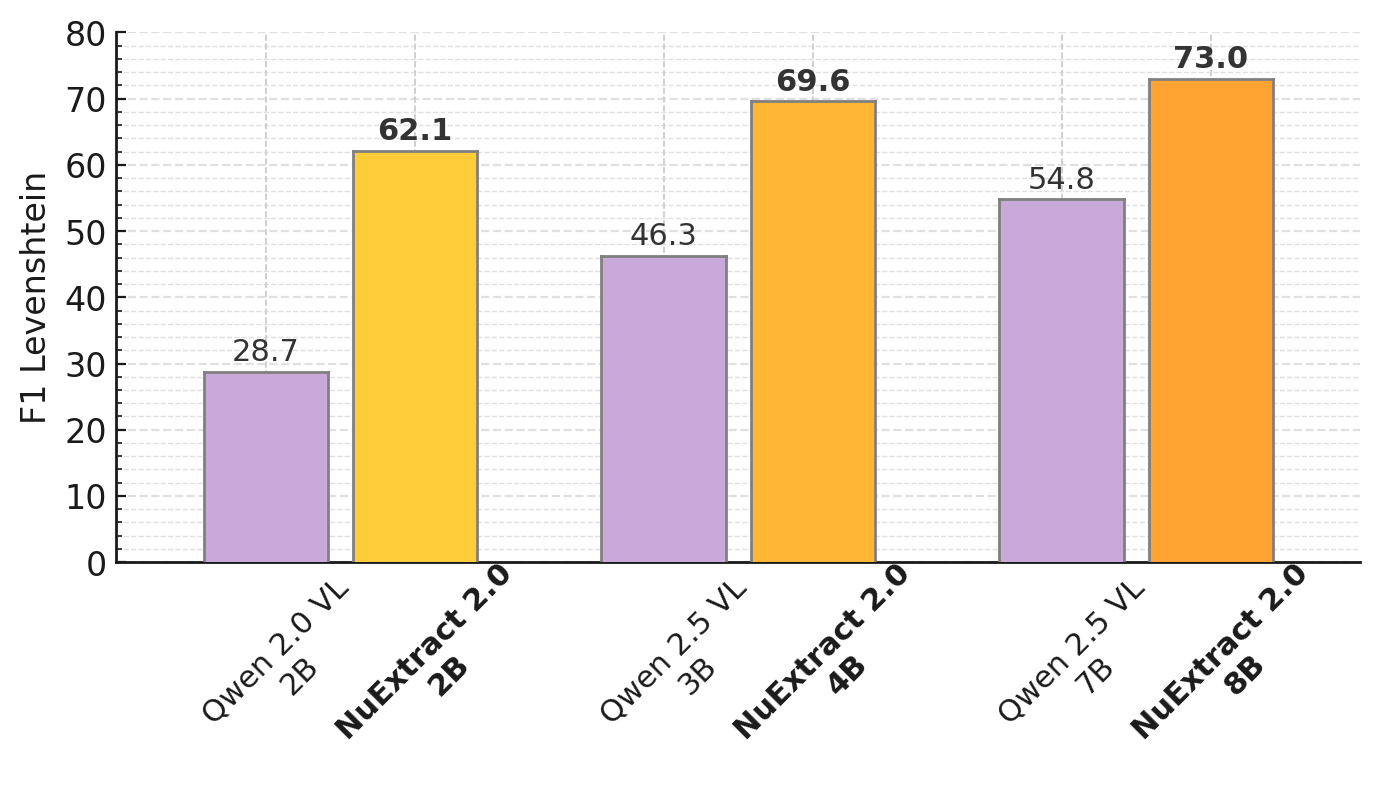

First off, let's look at the open-source models. Here we compare the performance of the base models with their corresponding NuExtract 2.0 versions:

We can see that transforming generic models into information extraction specialists massively increases performance, with NuExtract 2.0 8B reaching 73 F-score (a bit better than non-reasoning frontier models). We believe that these small specialized language models are perfectly suited for high-volume applications with resource-limited infrastructure.

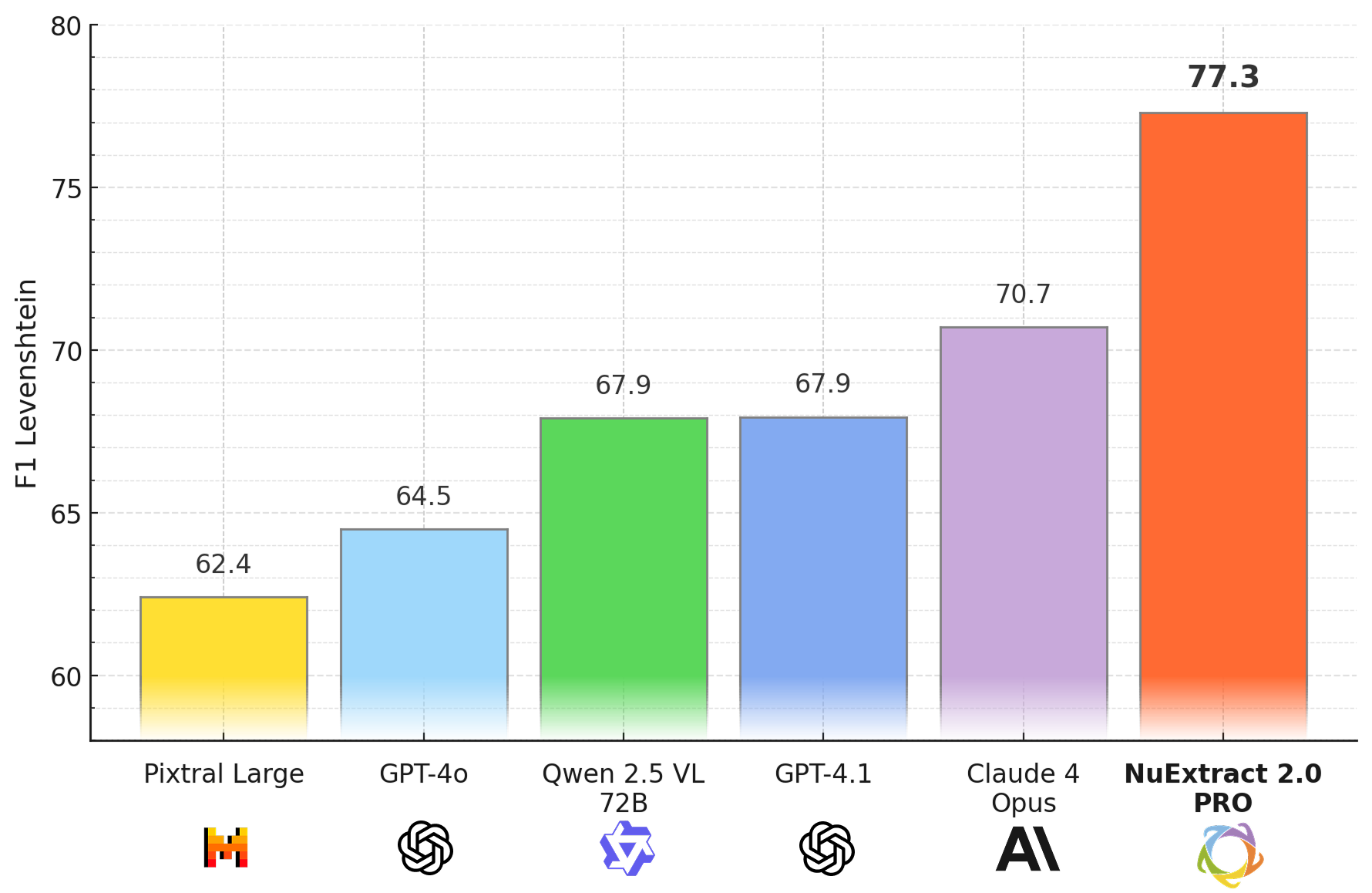

Let’s now look at the largest model, NuExtract 2.0 PRO, and compare it with the most popular non-reasoning frontier models:

We can see that NuExtract 2.0 PRO largely outperforms frontier models! This was quite a pleasant surprise to us: we had no idea whether NuExtract would still bring a significant improvement over generic models beyond a certain size.

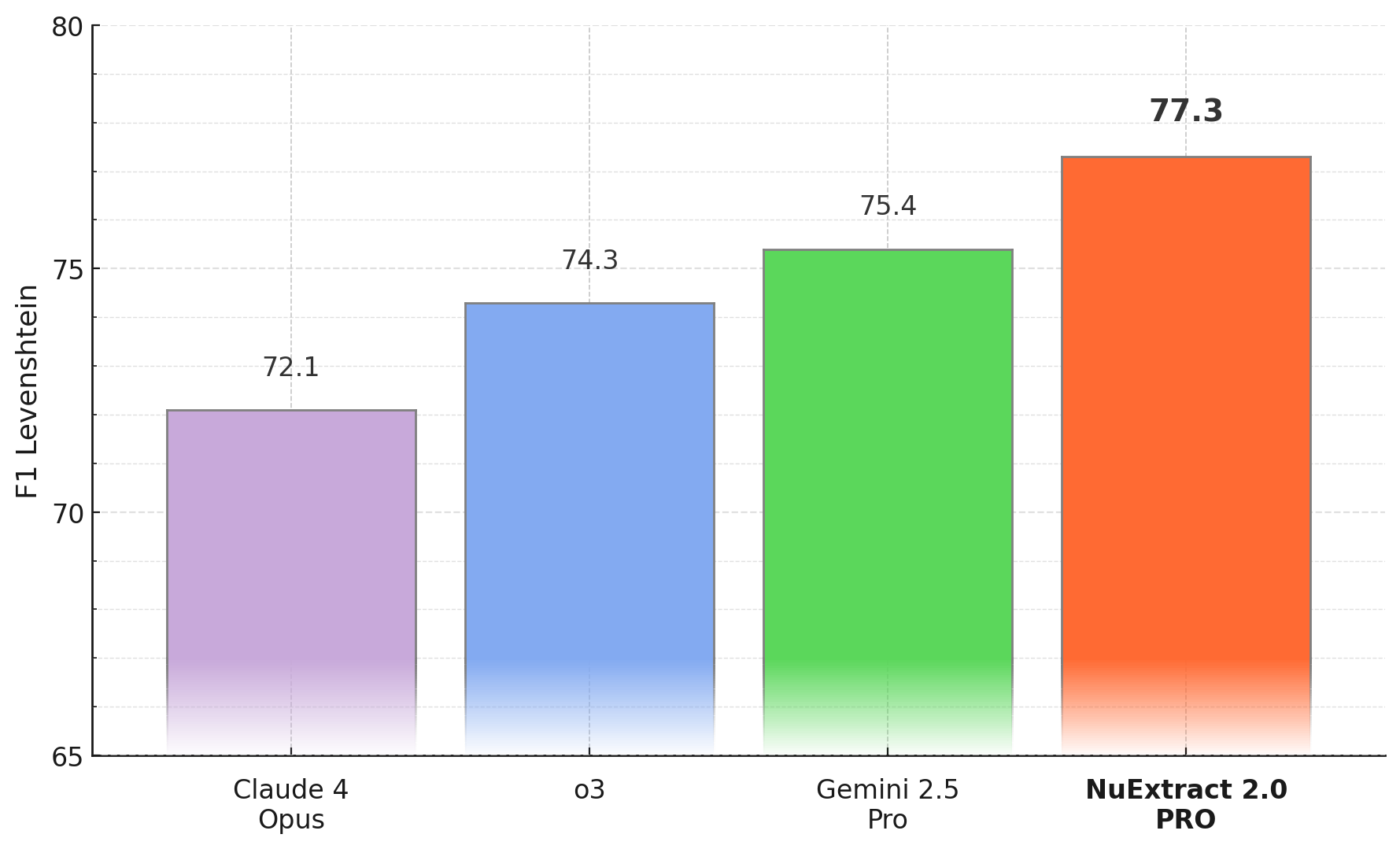

Now let's up the difficulty a bit and compare it to the latest reasoning LLMs. Indeed, NuExtract 2.0 PRO is a non-reasoning LLM: it directly returns an output given an input. This has the benefits of being faster and cheaper to use. Reasoning models first generate a bunch of "thought" tokens before answering. This slows down execution, but improves output quality. Can we compete with the latest and biggest reasoning models? Here is the performance comparison:

Surprisingly, NuExtract 2.0 PRO is also ahead of frontier reasoning models! The margins are smaller, though: NuExtract 2.0 PRO is clearly better than Claude 4 Opus with reasoning (+5 F-score), but only slightly better than Gemini 2.5 Pro (+2 F-score). Keep in mind that these reasoning models cost at least 10 times more than NuExtract 2.0 PRO to use on extraction tasks.

Still, these results show that reasoning is useful for information extraction, and that the next generation of NuExtract should probably have this ability. 🙂

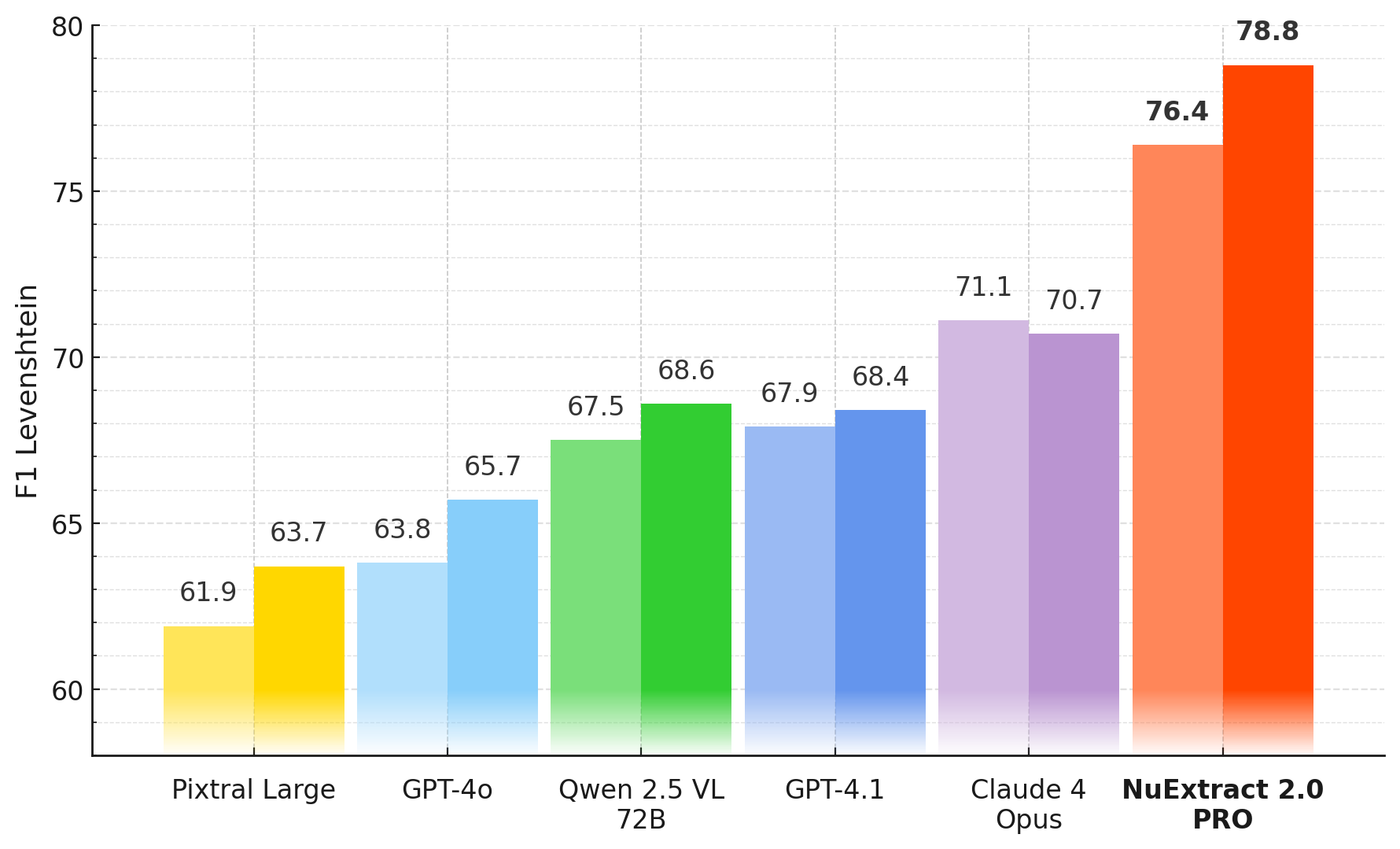

Another interesting thing to look at is the performance decomposed into recall and precision. A high recall means that your model correctly finds most of the information present in documents, but it might also invent extra information (a.k.a. hallucinations, or false positives). A high precision means that you can trust the extracted information, but some information might be missing (a.k.a. false negatives). Recall and precision are both important, but for the task of extracting structured information, precision is king: you would rather miss a piece of information than pollute your database with wrong information.
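To make the precision/recall trade-off concrete, here is a minimal Python sketch that scores a flat extraction against a ground truth, treating null as "no information". It uses exact matching per field, which is a simplification: the benchmark described above averages similarity scores, and its exact protocol has not been published yet. A wrong value counts both as a false positive (something incorrect was extracted) and as a false negative (the correct value was missed).

```python
def precision_recall_f1(predicted: dict, gold: dict):
    """Exact-match precision, recall, and F1 over the fields of a flat extraction.

    None (JSON null) means "no information". True negatives (both None) are ignored.
    """
    tp = fp = fn = 0
    for field in set(predicted) | set(gold):
        p, g = predicted.get(field), gold.get(field)
        if p is not None and p == g:
            tp += 1
            continue
        if p is not None:
            fp += 1  # extracted a value that is wrong or absent from the document
        if g is not None:
            fn += 1  # failed to return a value that is in the document
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = {"name": "Ana", "city": "Lisbon", "phone": None, "email": "ana@example.com"}
guessing = {"name": "Ana", "city": None, "phone": "555-0100", "email": "ana@example.com"}
cautious = {"name": "Ana", "city": None, "phone": None, "email": "ana@example.com"}
print(precision_recall_f1(guessing, gold))  # precision 2/3, recall 2/3
print(precision_recall_f1(cautious, gold))  # precision 1.0, recall 2/3
```

Returning null for the phone number instead of guessing raises precision from about 0.67 to 1.0 without changing recall, which is exactly the behavior that favors precision.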

Here is the recall (left bars) and precision (right bars) of NuExtract 2.0 PRO compared to non-reasoning frontier models:

We can see that NuExtract 2.0 PRO has a higher precision than recall, which is a good thing for information extraction. This precision-recall gap is in part due to the training procedure we use: we specifically teach NuExtract to say “I don’t know” (null output) when the requested information is not present in the document.

Ok, we see that NuExtract 2.0 is pretty good compared to other LLMs, but what is it bad at? We identified a few failure points that we will need to address.

The most obvious shortcoming of NuExtract 2.0 is its context size of 32k tokens. This corresponds to about 60 pages of text, or 20 pages of images, and naturally limits the maximum document size that can be processed. Increasing this limit is a priority for the next version.
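When a document exceeds the context, the usual workaround is to split it. Here is a hedged Python sketch of greedy page chunking under a token budget. Per-page token counts are inputs you would obtain from the real tokenizer; the figures in the example (about 1,600 tokens per image page, i.e. 32k divided by the 20 pages quoted above) and the 4,000 tokens reserved for the template and output are rough assumptions.

```python
def chunk_pages(page_tokens, budget):
    """Greedily group consecutive pages so that each chunk stays within `budget` tokens.

    Returns a list of chunks, each a list of page indices.
    """
    chunks, current, used = [], [], 0
    for page, tokens in enumerate(page_tokens):
        if tokens > budget:
            raise ValueError(f"page {page} alone needs {tokens} tokens (budget: {budget})")
        if current and used + tokens > budget:
            chunks.append(current)  # close the chunk before it overflows
            current, used = [], 0
        current.append(page)
        used += tokens
    if current:
        chunks.append(current)
    return chunks


CONTEXT = 32_000
RESERVED = 4_000  # assumed room for the template and the JSON output
chunks = chunk_pages([1_600] * 50, CONTEXT - RESERVED)
print([len(c) for c in chunks])  # [17, 17, 16]
```

With these numbers, a 50-page scanned document is split into 3 chunks. Merging the per-chunk outputs is left to you: lists can usually be concatenated, but single-valued fields may need reconciliation.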

That said, a large context size is not enough: in our tests, all LLMs tend to have issues with long-ish documents or outputs, especially when asked to extract long lists of things. Here is a little experiment you can do: take all the content of the Wikipedia page about the largest cities, and ask an LLM to "find absolutely all the cities mentioned in the text". This is a very easy task in terms of language understanding, yet NuExtract only finds 116 of the ~129 cities, o3 does worse with 111, and GPT-4o only finds 80! We believe this is due to the difficulty of keeping track of what has already been extracted and what has not. We are working on fixing this problem, but it shows that the road to perfect extraction is still long.
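If you want to reproduce this kind of experiment, measuring list recall only takes a few lines. The Python sketch below compares an extracted list to a reference list after light normalization (Unicode NFKC, case folding, trimming whitespace); `list_recall` is a hypothetical helper, and real city names may need more aggressive matching (e.g. aliases).

```python
import unicodedata


def _normalize(name: str) -> str:
    """Light normalization so that 'Delhi' and ' delhi' count as the same city."""
    return unicodedata.normalize("NFKC", name).casefold().strip()


def list_recall(extracted, expected):
    """Fraction of expected items found in the extraction, plus the missing ones."""
    found = {_normalize(x) for x in extracted}
    wanted = {_normalize(x) for x in expected}
    if not wanted:
        return 1.0, []
    missing = sorted(wanted - found)
    return len(wanted & found) / len(wanted), missing


recall, missing = list_recall(["Tokyo", "delhi ", "Tokyo"], ["Tokyo", "Delhi", "Shanghai"])
print(missing)  # ['shanghai']
```

Applied to the counts above against ~129 cities, this gives recalls of about 0.90 for NuExtract (116), 0.86 for o3 (111), and 0.62 for GPT-4o (80).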

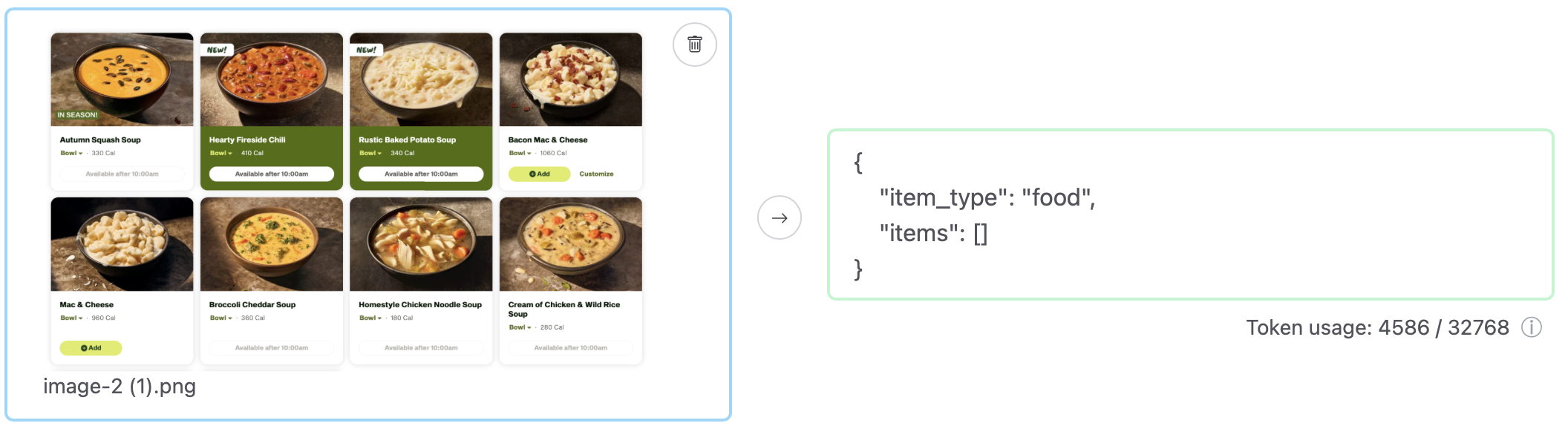

We identified instances of NuExtract 2.0 being lazy, in the sense that it returns an output containing almost no information. This tends to happen when several conditions are met: the template is complex, there is a long list of objects to extract (table output), and a large part of the requested information is missing from the document. Here is the worst case that we found; the list is not that long, but we ask for 6 properties that are missing (like price, ratings, number of reviews…):

If you encounter this behavior, we advise either using a simpler template, splitting the template into smaller templates, or splitting the document. We should fix this issue on the platform soon (a fix for the open-source versions will take a bit longer).
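Splitting the template can be automated along these lines. In this Python sketch, `extract` is a hypothetical callable (for example a wrapper around the model or the platform API) that takes a document and a template and returns a dict; the sketch calls it once per group of top-level fields and merges the results. Splitting the properties of objects inside a list would break the alignment between rows, so in that case splitting the document is usually the safer option.

```python
def split_template(template: dict, max_fields: int) -> list:
    """Split a template's top-level fields into groups of at most `max_fields`."""
    keys = list(template)
    return [
        {key: template[key] for key in keys[i:i + max_fields]}
        for i in range(0, len(keys), max_fields)
    ]


def extract_in_parts(extract, document: str, template: dict, max_fields: int = 3) -> dict:
    """Run a (hypothetical) `extract(document, template) -> dict` per sub-template and merge."""
    result = {}
    for part in split_template(template, max_fields):
        result.update(extract(document, part))
    return result


template = {"a": "string", "b": "string", "c": "string", "d": "string", "e": "string"}
print([list(part) for part in split_template(template, 2)])  # [['a', 'b'], ['c', 'd'], ['e']]
```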

In some rare instances, NuExtract 2.0 repeats the same element of a list over and over again. While very rare, this behavior is bad because it breaks the output format. We find that it mainly happens when asking to extract long lists from low-resolution images containing non-Latin characters. On the NuExtract platform, we detect this behavior and correct it.
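We do not describe here how the platform detects looping, but one simple heuristic is to check whether the raw generated text ends with the same chunk of characters repeated many times. The Python sketch below does that; `find_repeating_tail` and its thresholds are hypothetical and would need tuning, since legitimately repetitive text (a long run of dashes, for example) can also trigger it.

```python
def find_repeating_tail(text, min_unit=5, max_unit=200, min_repeats=4):
    """Detect whether `text` ends with a chunk repeated at least `min_repeats` times.

    Returns (start_index, unit_length, repeats) for the first matching unit
    length, or None if no such repetition is found.
    """
    n = len(text)
    for unit_len in range(min_unit, min(max_unit, n // min_repeats) + 1):
        unit = text[n - unit_len:]
        start, repeats = n - unit_len, 1
        # Walk backwards while the preceding chunk is identical to the unit.
        while start - unit_len >= 0 and text[start - unit_len:start] == unit:
            start -= unit_len
            repeats += 1
        if repeats >= min_repeats:
            return start, unit_len, repeats
    return None


looping = '{"items": ["A", "B", ' + '"C", ' * 10  # truncated, looping output
print(find_repeating_tail(looping))  # (21, 5, 10)
```

When a loop is detected, one option is to discard the output and retry, for example on a smaller piece of the document or a higher-resolution image.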

Invalid JSON output is, like looping, a pretty rare issue. It typically involves an integer with a leading 0, such as 07 instead of 7, or, more exotically, a number expressed as a mathematical expression.

Again, we prevent such cases on the NuExtract platform (JSON output is guaranteed). If you use NuExtract outside the platform and want to be absolutely sure that your JSON is valid, we advise using a post-processing library like jsonrepair. In principle, you could also use a guided-generation library, but it slows down generation and does not bring much additional value when combined with NuExtract.
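For the leading-zero case specifically, a small pre-parsing fix is enough. The Python sketch below (hypothetical helpers, standard library only) first tries `json.loads`, and only if that fails rewrites integer parts such as `07` to `7` outside of string literals. It does not handle numbers written as mathematical expressions, which is where a general-purpose repair library earns its keep.

```python
import json
import re

# A JSON-like number: optional minus, integer part, optional fraction/exponent.
_NUMBER = re.compile(r"(-?)(\d+)((?:\.\d+)?(?:[eE][+-]?\d+)?)")


def strip_leading_zeros(text: str) -> str:
    """Rewrite integer parts like 07 -> 7, leaving string literals untouched."""
    out = []
    i = 0
    in_string = False
    while i < len(text):
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < len(text):
                out.append(text[i + 1])  # copy the escaped character as-is
                i += 2
                continue
            if ch == '"':
                in_string = False
            i += 1
            continue
        if ch == '"':
            in_string = True
            out.append(ch)
            i += 1
            continue
        match = _NUMBER.match(text, i)
        if match:
            sign, int_part, rest = match.groups()
            out.append(sign + (int_part.lstrip("0") or "0") + rest)
            i = match.end()
            continue
        out.append(ch)
        i += 1
    return "".join(out)


def parse_model_output(raw: str):
    """Parse JSON, retrying once after fixing leading zeros."""
    try:
        return json.loads(raw)
    except json.JSONDecodeError:
        return json.loads(strip_leading_zeros(raw))


print(parse_model_output('{"age": 07, "id": "007"}'))  # {'age': 7, 'id': '007'}
```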

Overall, we are pretty satisfied with this new version. NuExtract 2.0 is the highest-performing extraction LLM, it can be used for pretty much any extraction task, on any kind of document, and in any language. We believe this version will open up plenty of applications.

That said, the road is not over. This model still makes mistakes that seem fixable, and there are important features missing, such as an accurate estimation of extraction uncertainty, or the ability to process arbitrarily long documents. We are working on that, but in the meantime, we hope that you will make good use of this model! Also, as usual, we are all ears for feedback 😊