This post is about the NuExtract Platform — check the sister post about NuExtract 2.0.

Information extraction — sometimes called structured extraction — is the task of extracting information from an unstructured document (email, invoice, form, contract, and so on) into a structured output for a computer to use.

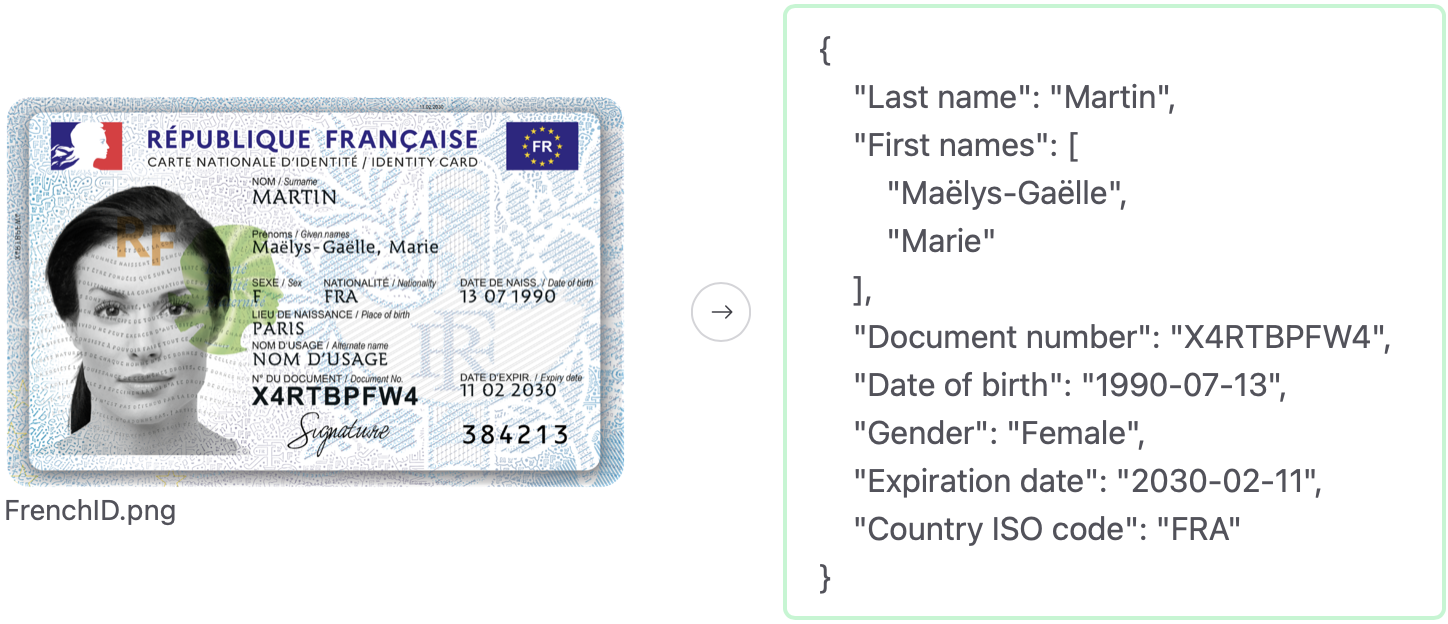

For example, let’s say that you need to verify online users. You would need to transform scans of their IDs into structured data:

The above JSON output can easily be handled by a computer.

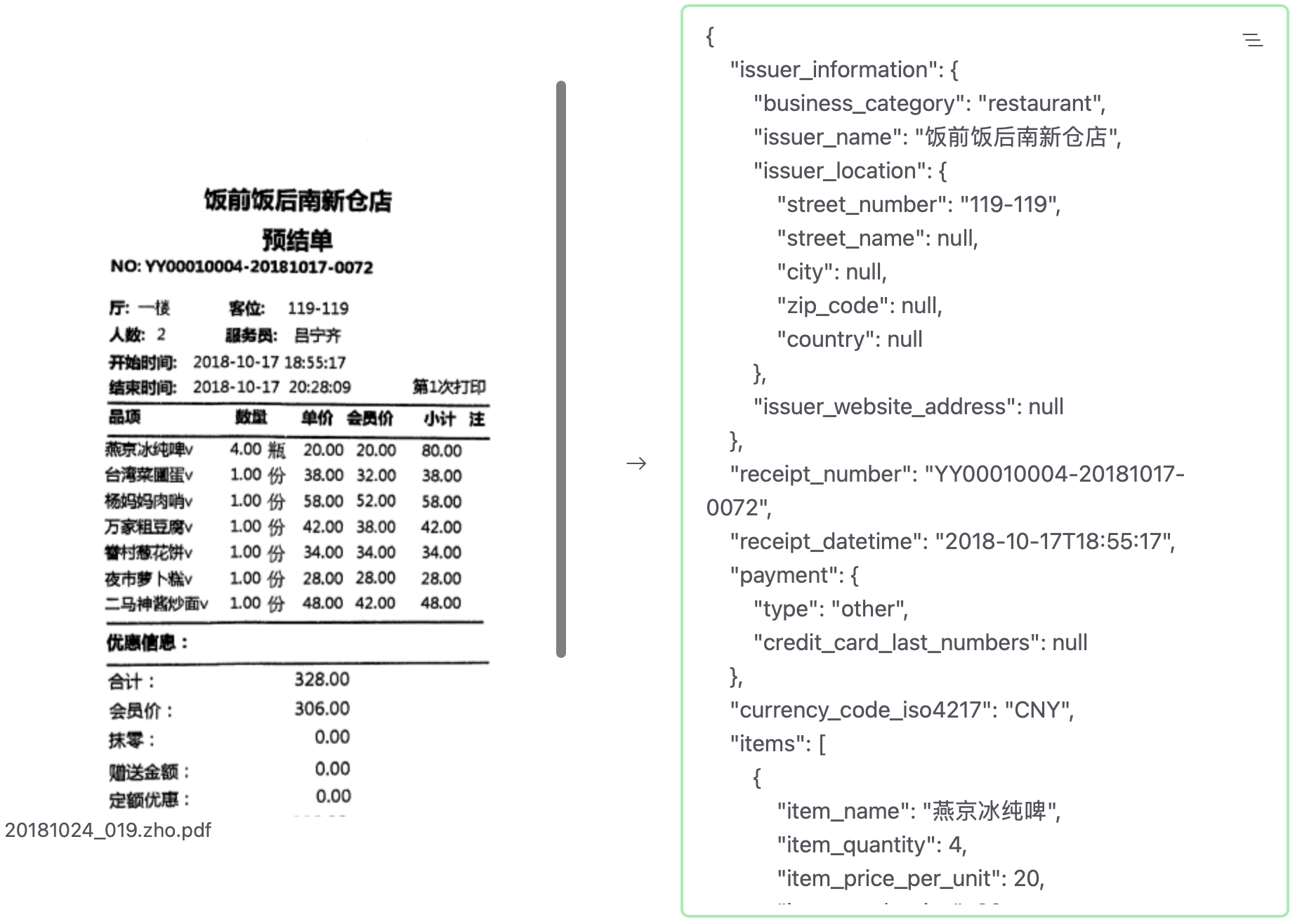

Similarly, you might need to extract quantities/prices from invoices or receipts:

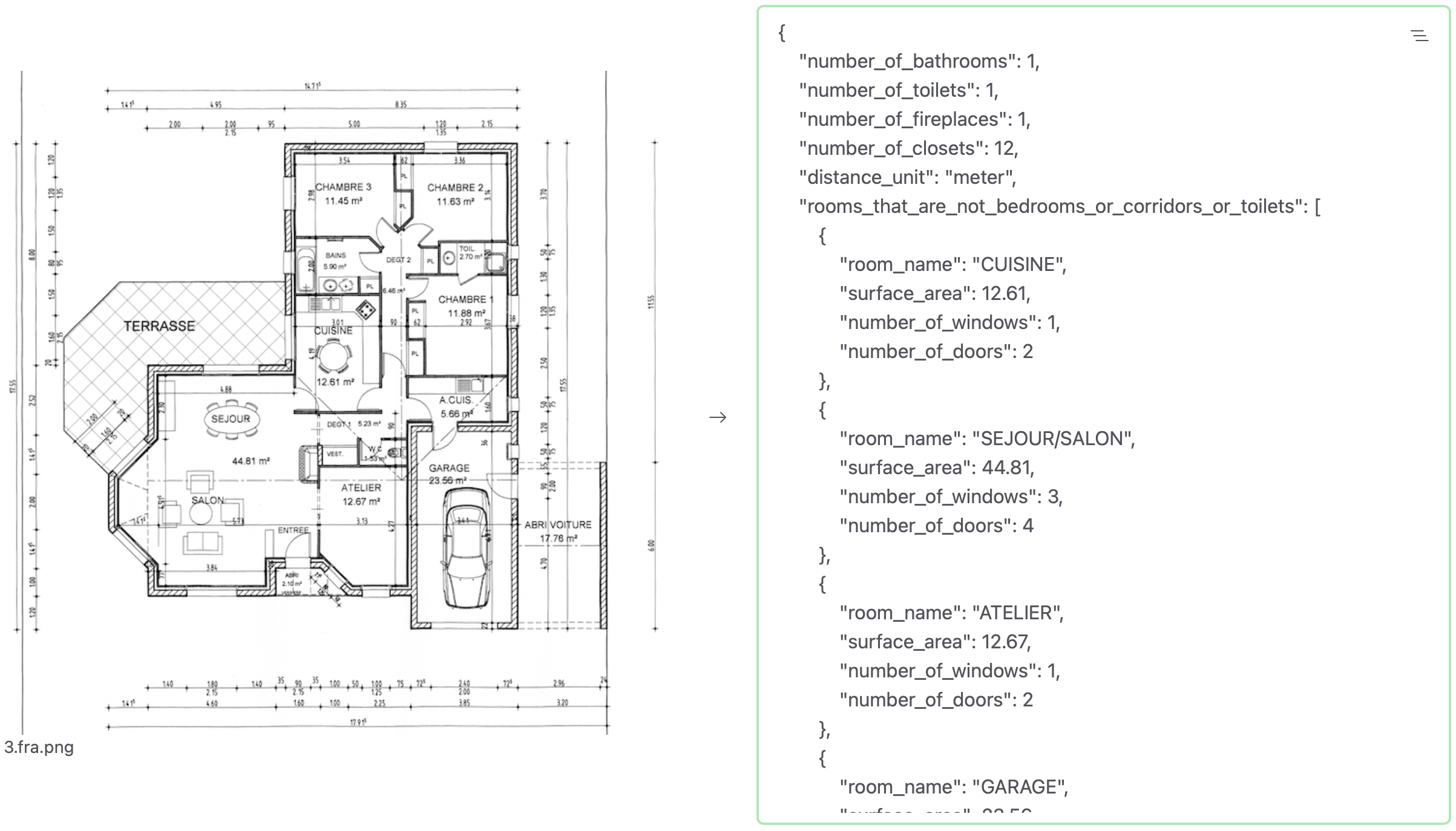

Or you might need to classify/extract information from technical documents such as this plan:

Information extraction is not just about processing scanned documents. More often than not, documents are just regular PDFs, like this contract:

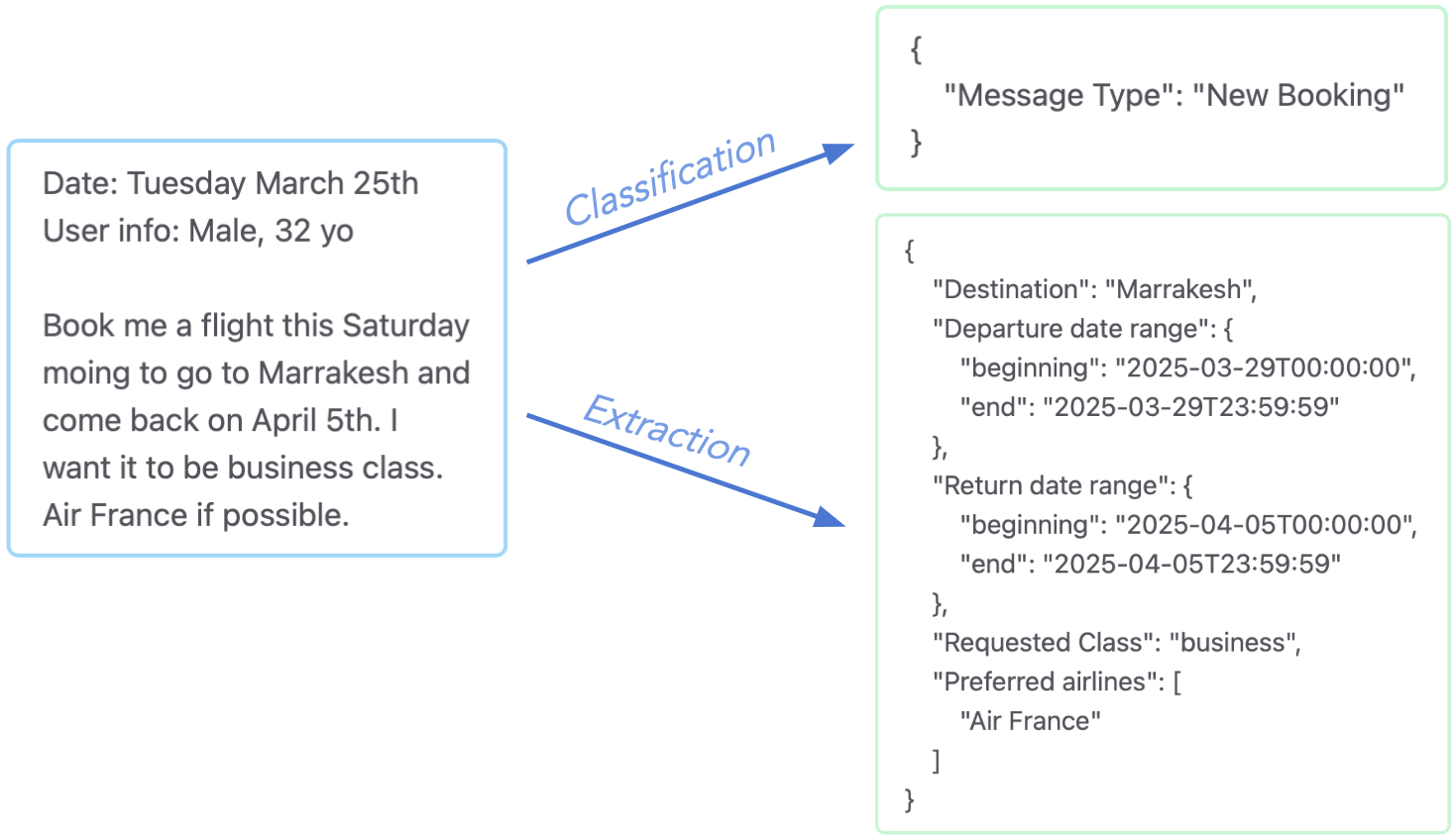

It can also be about classifying or extracting information from raw text documents, such as reports, emails, or customer messages:

If the input is a document and the output is a JSON, this is an information-extraction task.

Companies are flooded with unstructured documents, and the need for information extraction is nearly everywhere. Here are the main use cases that we encounter at NuMind, organized by industry:

If you work in one of these industries, chances are that you have information extraction needs as well!

The field of information extraction has a long history. For decades, and up until recently, extraction was tackled via heuristics, regex-like rules, shallow ML methods, traditional OCR preprocessing, and a lot of human effort. These methods limited information extraction to simple tasks with low-variability documents.

Large Language Models (LLMs) are changing the game. Thanks to their language understanding, world knowledge, and ability to generate complex outputs, they solve extraction problems that were previously out of reach. Furthermore, as they continue improving, LLMs hold the promise of “solving” information extraction entirely, which means being able to perform any extraction task perfectly while only requiring minimal human input to define the task.

This promise is not satisfied yet — LLMs still make plenty of extraction mistakes — but we found a path to get there: We discovered about a year ago that it was possible to create specialized LLMs that were much better at extracting information than generalist LLMs. From this research, we created NuExtract, a line of LLMs specialized in extracting information. One interesting thing is that NuExtract models hallucinate less, as we managed to teach them to say “I don’t know” (null value, in JSON speak) when the requested information is not present in the document.

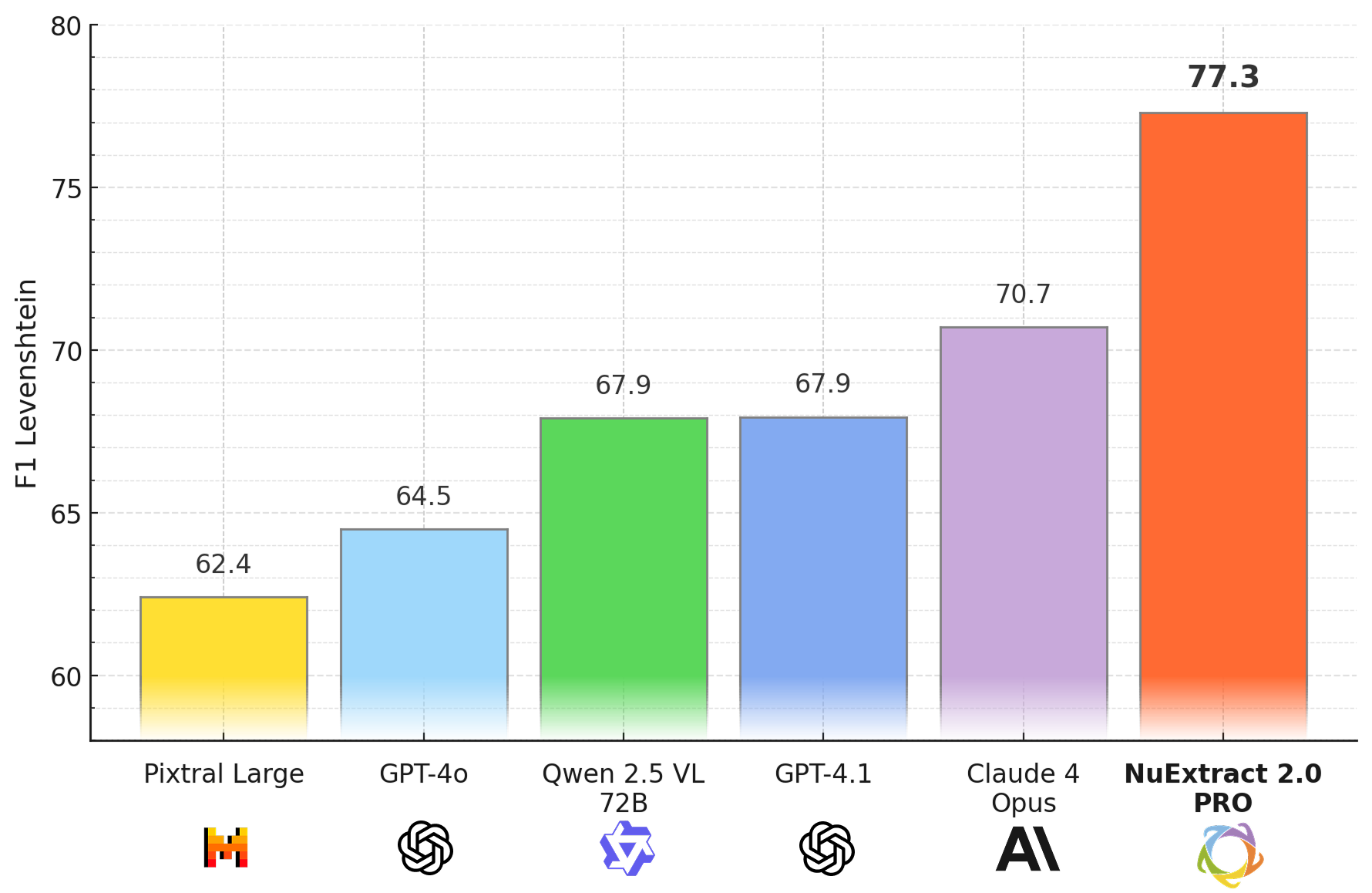

At first, NuExtract models were small — only a few billion parameters — and limited to text documents. We recently moved on to bigger models which can also process PDFs & scans via a vision module. The nice surprise is that performance gains that we see on small models are still present on big models! Our latest and biggest model to date, NuExtract 2.0 PRO, is simply outclassing non-reasoning frontier LLMs:

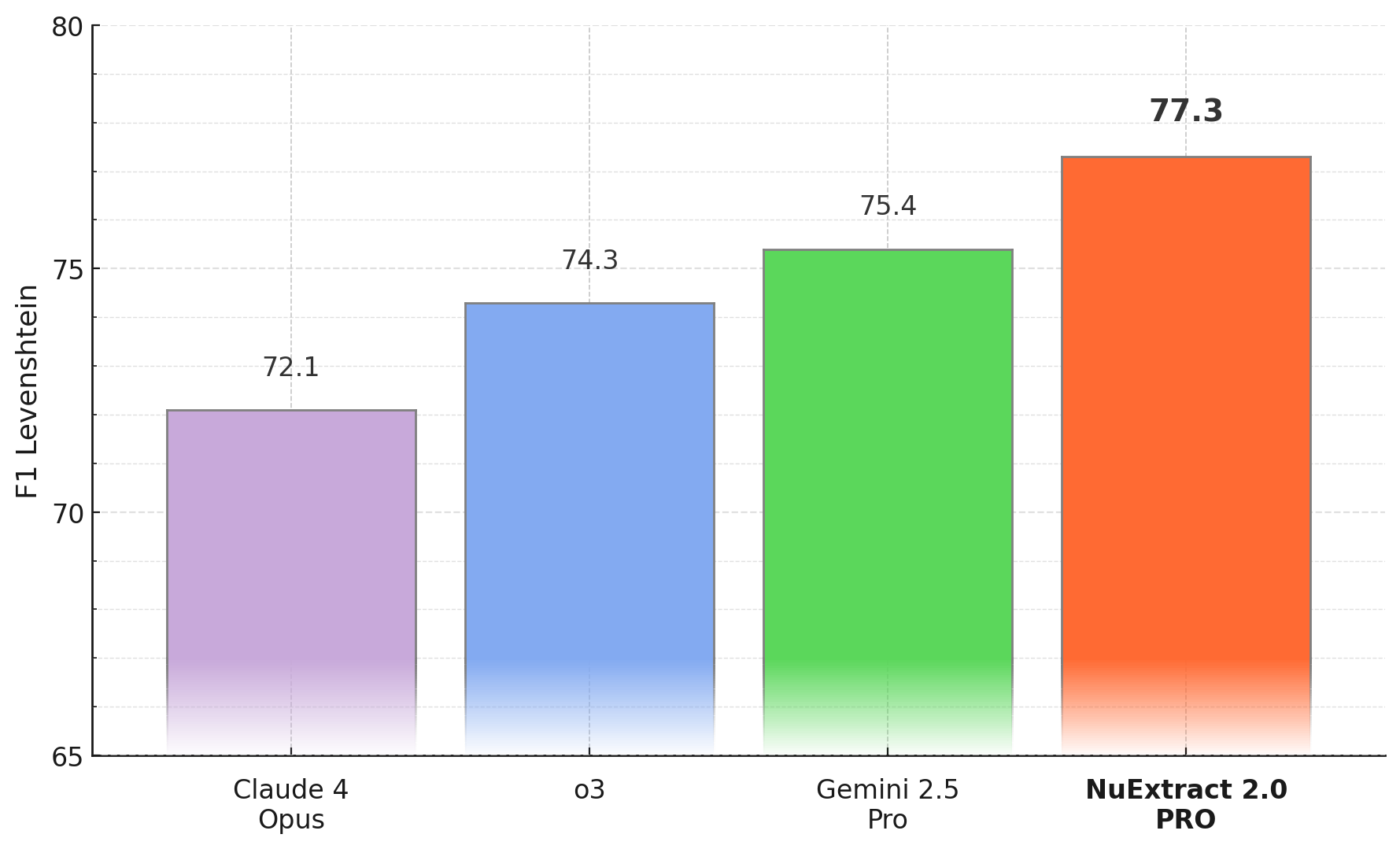

Even more surprising, NuExtract 2.0 PRO is also surpassing reasoning frontier models, while being faster and at least 10x cheaper to use:

These results motivated us to create the NuExtract platform, mostly to provide API access to NuExtract 2.0 PRO.

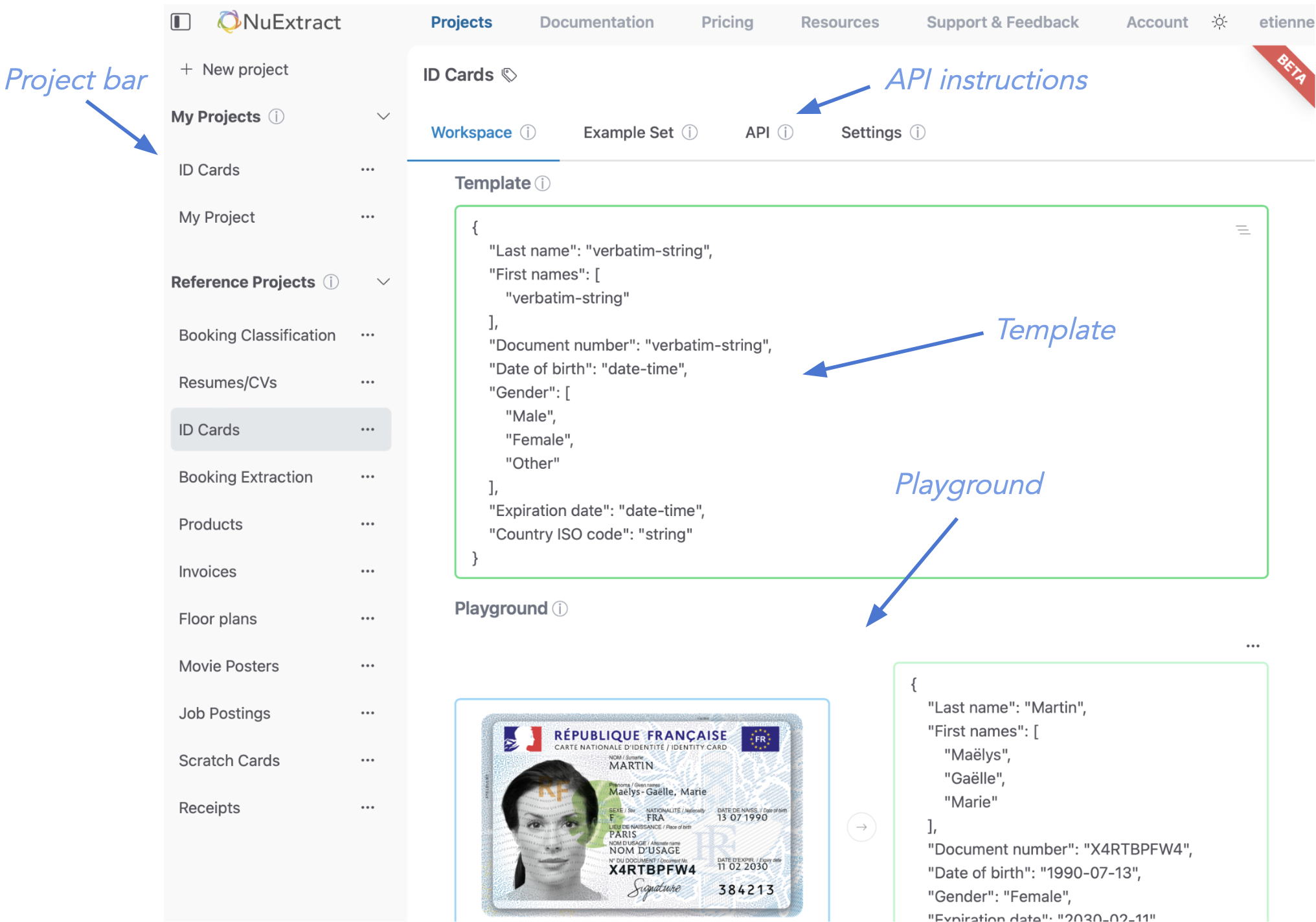

The NuExtract platform ended up being much more than “just” providing API access to a model. For example, we included a pre-processing step to handle various document formats (PDFs, spreadsheets, scans), a post-processing step to make sure the output is valid JSON, and, importantly, a graphical interface to easily define extraction tasks and test the model. Here is what this interface looks like:

Note that this platform, like the model NuExtract 2.0 PRO, is multilingual.

Another important thing is that the NuExtract platform can be deployed privately, which is a requirement for companies having data privacy/confidentiality constraints. This is in part due to the fact that NuExtract 2.0 PRO, while being the biggest of the NuExtract models, still fits on one H100 GPU, making it practical for private use.

Overall, there are four main reasons for using the NuExtract Platform over alternative solutions:

You can use the entire platform via API if you want, and directly make extraction calls. However, the friendlier way to use the platform is to:

Let’s look at these steps further (and you can check the user guide for more details).

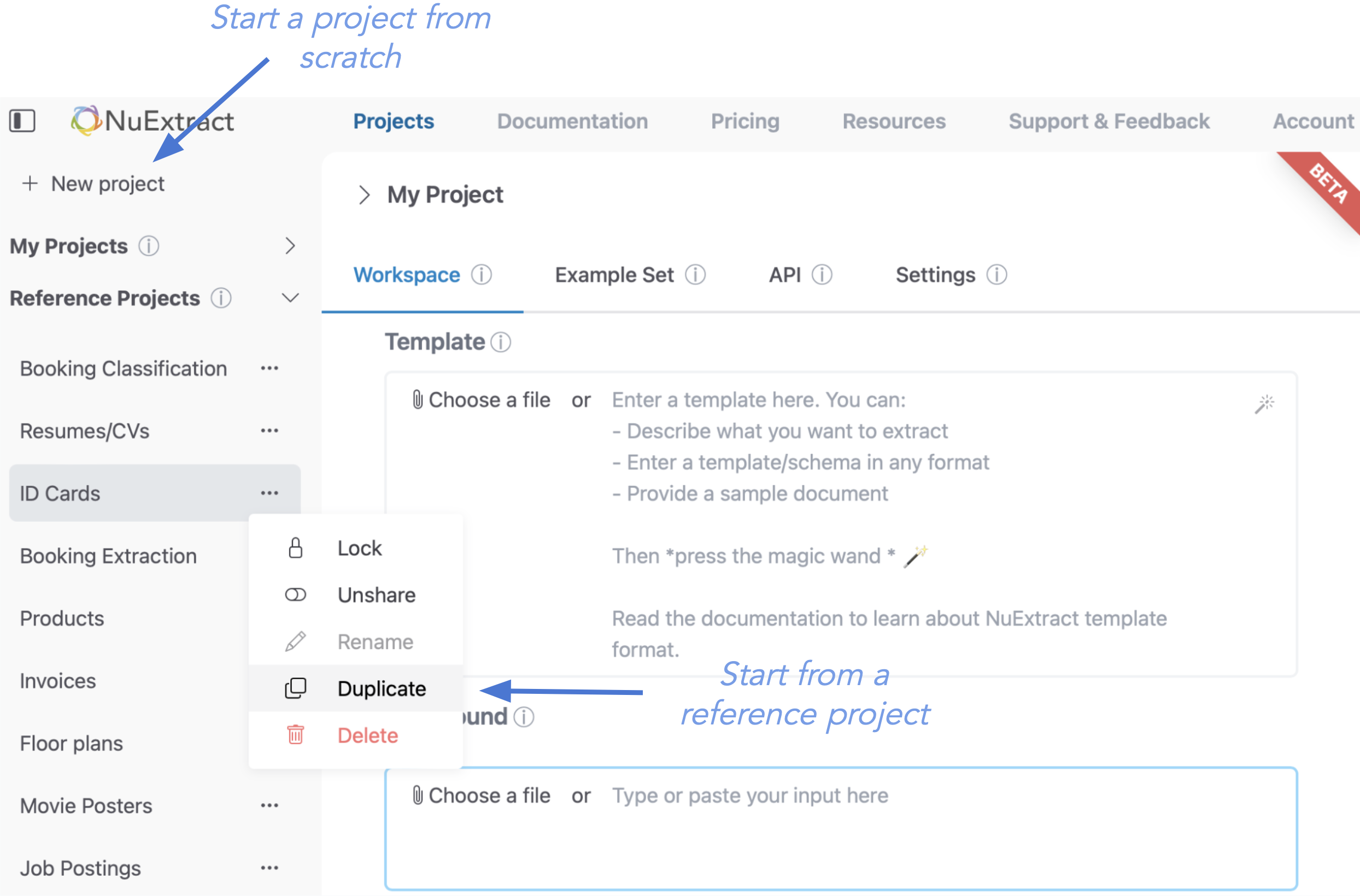

The very first step is to create a “project”. In the platform, a project corresponds to one specific extraction task, and each project has its own API endpoint to extract information from documents.

You can choose to start a project from scratch, or duplicate an existing “reference project”:

Each project has four tabs:

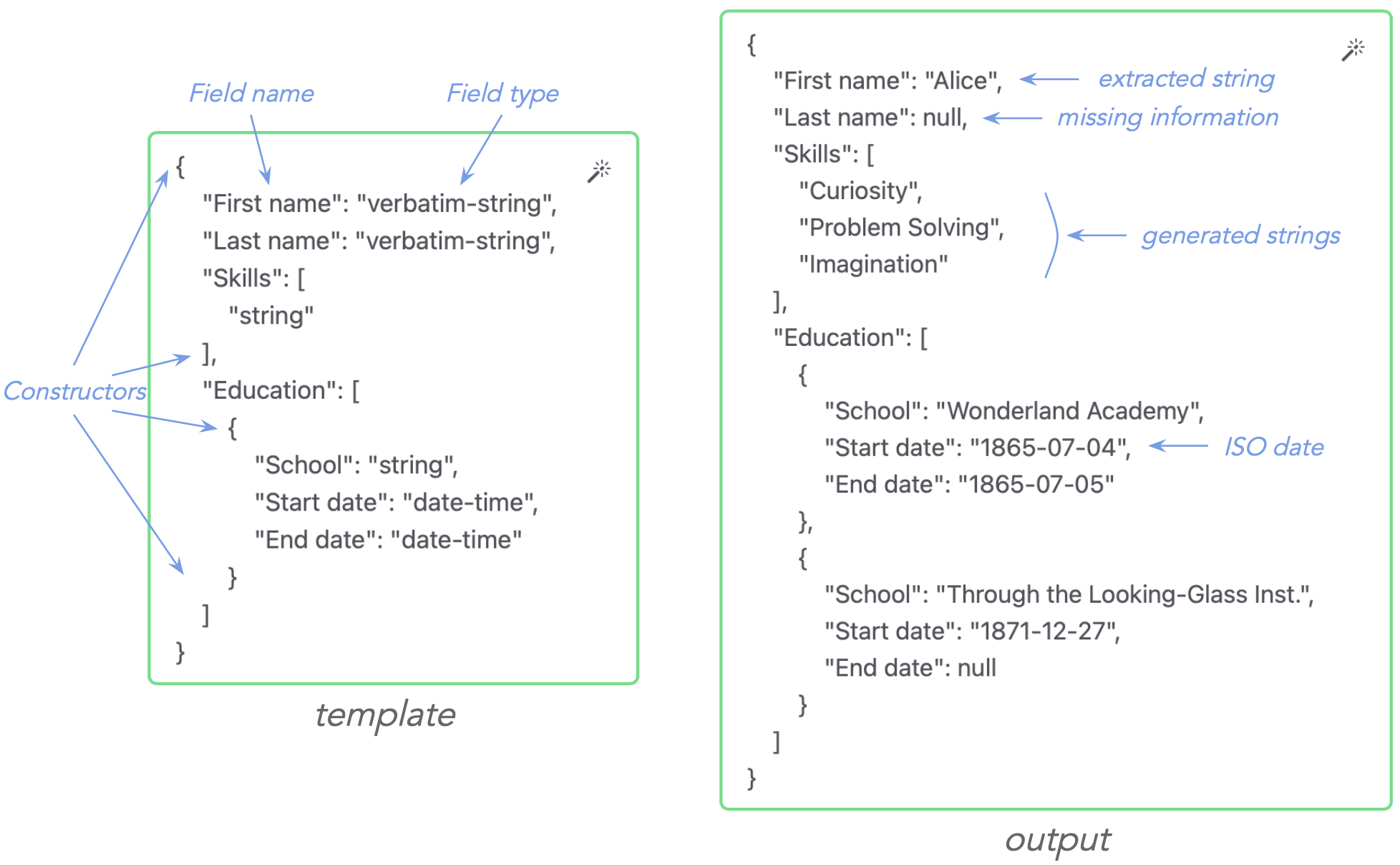

The next step is to create a template for your extraction task in the Workspace. The template defines what to extract and how the output should be structured: it is a hard constraint on the output. Importantly, the returned output always matches its corresponding template. Here is an example of a template and a compatible extraction output:

You can see named fields such as "first name" indicating what to extract, type specifications such as "verbatim-string" indicating types/formats that the extracted values should have, and constructors {…} (object) and […] (set) defining the output structure.
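To make the relationship between a template and its output concrete, here is a minimal Python sketch. The template is reconstructed from the field names of the API response shown later in this post, so it is an illustration rather than the platform's actual example. Only "verbatim-string" is named in the text; the "date-time" type label is an assumption, and `matches_template` is a hypothetical helper, not a platform function.

```python
# Hypothetical template for a minimal CV extraction task.
# "verbatim-string" comes from the post; "date-time" is an assumed type label.
template = {
    "First name": "verbatim-string",
    "Last name": "verbatim-string",
    "Skills": ["verbatim-string"],
    "Education": [
        {
            "School": "verbatim-string",
            "Start date": "date-time",
            "End date": "date-time",
        }
    ],
}

# An output compatible with the template (None stands for JSON null).
output = {
    "First name": "Alice",
    "Last name": None,
    "Skills": [],
    "Education": [
        {"School": "Wonderland Academy", "Start date": "1862-07-04", "End date": None}
    ],
}


def matches_template(template, output):
    """Return True if `output` has the same shape as `template`.

    Illustrative helper only: it checks structure, not value types.
    """
    if isinstance(template, dict):
        # An object must expose exactly the fields named in the template.
        return (
            isinstance(output, dict)
            and output.keys() == template.keys()
            and all(matches_template(template[k], output[k]) for k in template)
        )
    if isinstance(template, list):
        # A one-element list describes the shape of every item.
        return isinstance(output, list) and all(
            matches_template(template[0], item) for item in output
        )
    # A leaf is either null or a scalar value.
    return output is None or isinstance(output, (str, int, float, bool))


print(matches_template(template, output))
```

A check like this can be handy in client code as a sanity test, even though the platform is described as enforcing the template on its side.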

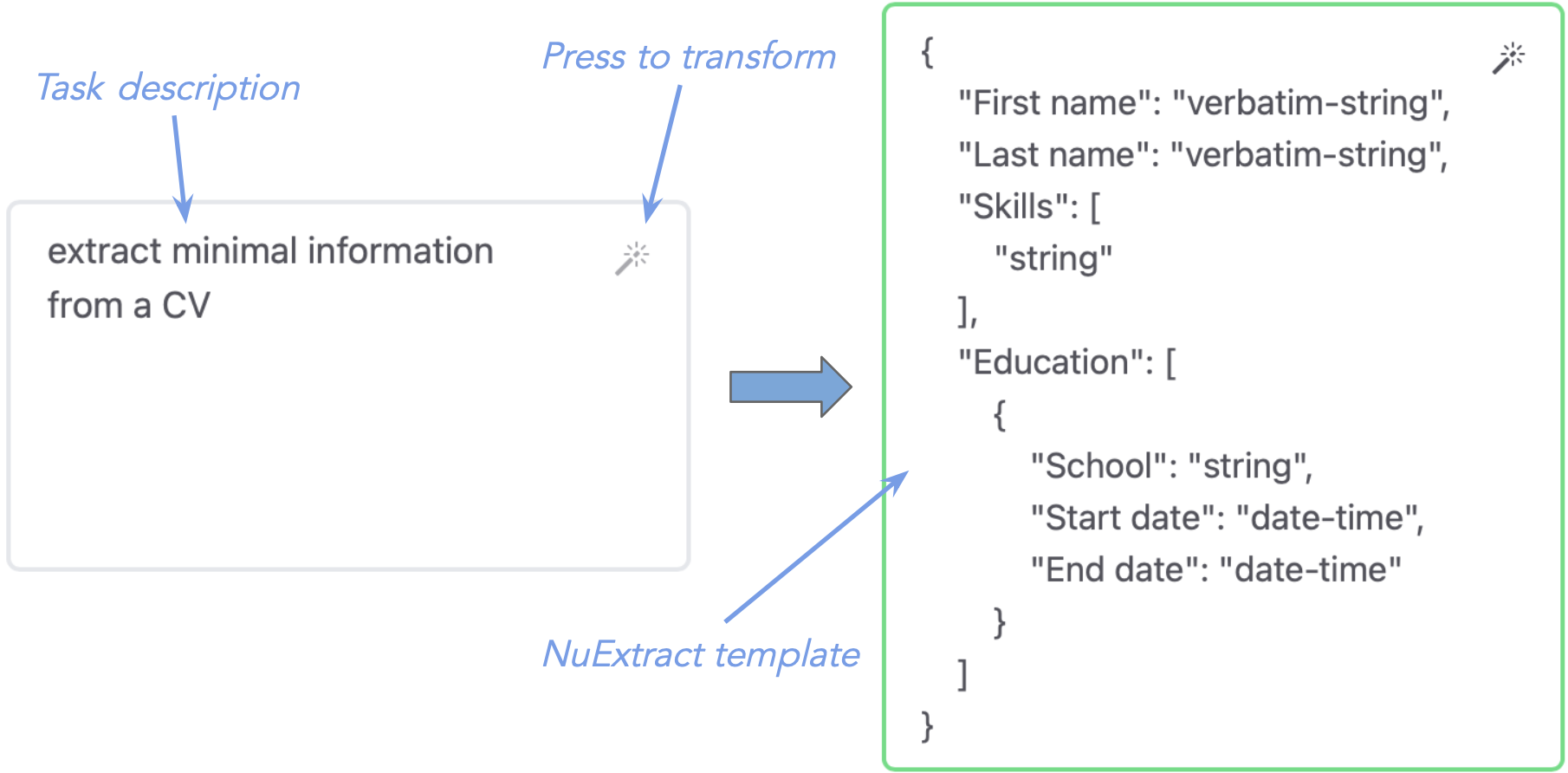

To create such a template, you can provide a description of the task, such as “extract minimal information from a CV”, and press the magic wand 🪄 to obtain a valid NuExtract template:

You can then modify the result to be exactly what you want by looking at the template format in the documentation.

Instead of a description, you can also provide a JSON schema, Pydantic code, or even a document — pressing the magic wand will turn whatever you provide into a NuExtract template.

Templates alone can be ambiguous, so it sometimes helps to give NuExtract examples of the task. This can be done in the “Example Set” tab by providing input→output examples of correct extractions, such as:

NuExtract learns from such examples to perform the task better. Even a single example can improve performance substantially. It is generally a good idea to provide examples on which the model struggles.

These examples are added to the prompt of NuExtract (a.k.a. in-context learning), which means that, at the moment, the number of examples is limited by the context size of the model. We are working on a solution to allow for an arbitrarily large number of examples.

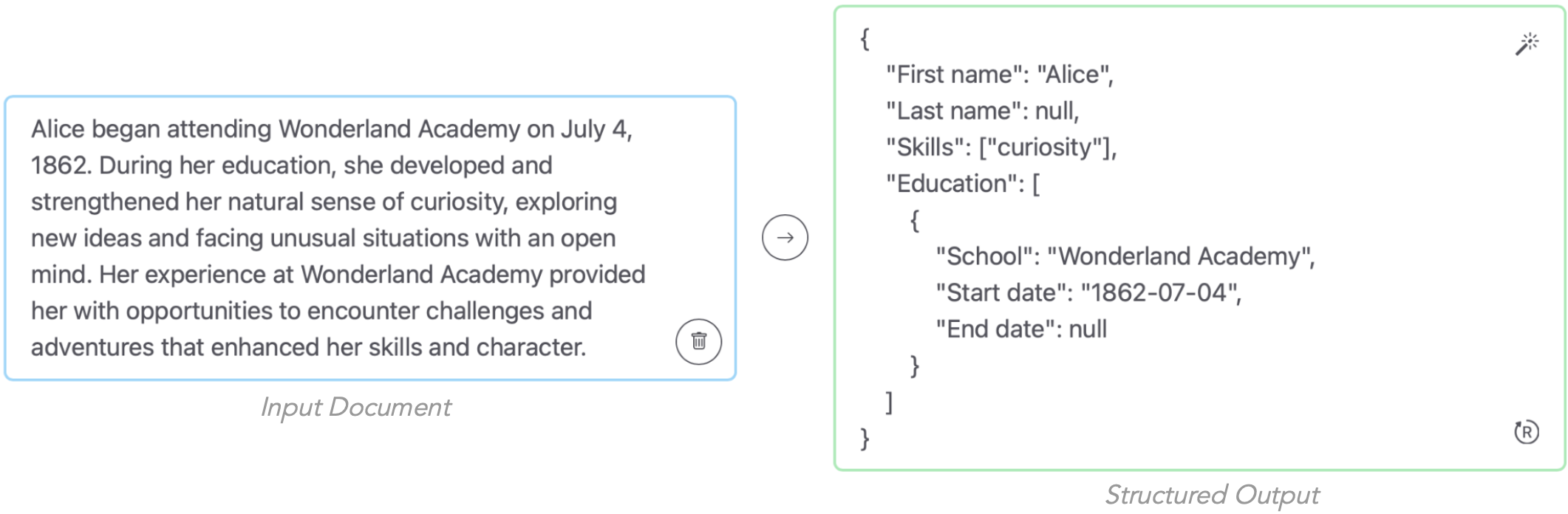

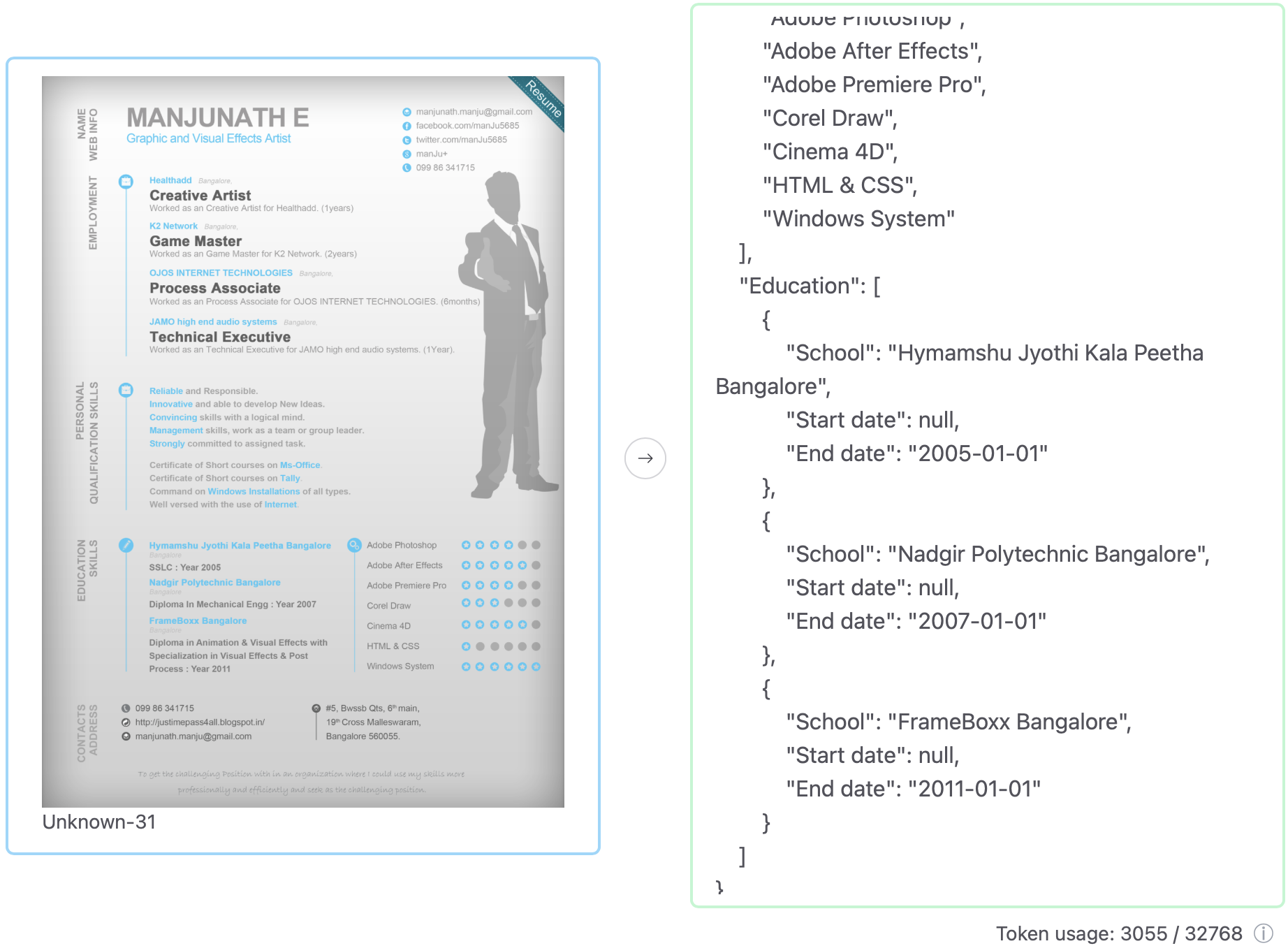

At any point during the definition of your task, you can test the model in the playground, either by typing/pasting text, or by uploading a document:

The extraction here seems correct, and you can see that some fields have null values. null is the way NuExtract expresses that it could not find or infer the requested information. Knowing when to return null is a strength of NuExtract.

The goal of the playground is generally to find extraction errors. When you find such an error, you can add the corresponding document and its corrected extraction to the teaching examples in order to correct the model.

Note that you can create multiple “playpods” to keep track of performance as you modify the template and teaching examples.

Once you are happy with how NuExtract behaves for your task, it is time to put it in production! To do so, there is one extraction API endpoint for each project:

https://nuextract.ai/api/projects/{projectId}/extract

You provide a text or a file, and it returns the extracted information according to the task defined in the project.

To use this endpoint, you need to create an API key and replace {projectId} with the project ID found in the API tab of the project. Let’s test it on a minimal text document:

```bash
API_KEY="your_api_key_here"
PROJECT_ID="87f22ce1-5c1d-4fa9-b2f1-9b594060845f"

curl "https://nuextract.ai/api/projects/$PROJECT_ID/extract" \
  -X "POST" \
  -H "Authorization: Bearer $API_KEY" \
  -H "content-type: text/plain" \
  -d "Alice began attending Wonderland Academy on July 4, 1862."
```

The result is:

```json
{
  "result": {
    "First name": "Alice",
    "Last name": null,
    "Skills": [],
    "Education": [
      {
        "School": "Wonderland Academy",
        "Start date": "1862-07-04",
        "End date": null
      }
    ]
  },
  "completionTokens": 52,
  "promptTokens": 237,
  "totalTokens": 289,
  "logprobs": -0.13810446072918126
}
```

We can see that the extraction is correct and that null has been used to represent missing information. We can also see the number of input and output tokens, and the total log probability of the output tokens, which can help gauge the model’s confidence in its extraction.
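As a rough illustration of how the `logprobs` field could be turned into a confidence score, here is a minimal sketch. It assumes the value is the sum of natural-log probabilities of the output tokens, which the post suggests but does not spell out. The helper names are hypothetical.

```python
import math

# Response fields copied from the example above.
response = {
    "completionTokens": 52,
    "logprobs": -0.13810446072918126,
}


def sequence_probability(total_logprob):
    """Probability of the whole output, assuming a sum of natural-log token probabilities."""
    return math.exp(total_logprob)


def mean_token_probability(total_logprob, n_tokens):
    """Geometric-mean probability per output token (length-normalized)."""
    return math.exp(total_logprob / n_tokens)


seq_p = sequence_probability(response["logprobs"])
tok_p = mean_token_probability(response["logprobs"], response["completionTokens"])
print(f"sequence probability: {seq_p:.3f}")
print(f"mean token probability: {tok_p:.4f}")
```

The sequence probability shrinks as outputs get longer, so the length-normalized value may be easier to compare across documents. Any threshold for sending an extraction to human review is an application choice to calibrate on your own data.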

Similarly, you can try this endpoint on a file:

```bash
API_KEY="your_api_key_here"
PROJECT_ID="87f22ce1-5c1d-4fa9-b2f1-9b594060845f"

curl "https://nuextract.ai/api/projects/$PROJECT_ID/extract" \
  -X "POST" \
  -H "Authorization: Bearer $API_KEY" \
  -H "content-type: application/octet-stream" \
  --data-binary @file_name.ext
```

And you can also use the Python SDK to perform such extractions:

```python
from pathlib import Path

from numind import NuMind

api_key = "your_api_key_here"
project_id = "87f22ce1-5c1d-4fa9-b2f1-9b594060845f"

client = NuMind(api_key=api_key)

# Read the document as raw bytes
file_path = Path("document.odt")
with file_path.open("rb") as file:
    input_file = file.read()

# Call the project's extraction endpoint
output_schema = client.post_api_projects_projectid_extract(project_id, input_file)
```

You can find more information about the API in the API Reference and about the Python SDK in the SDK documentation.

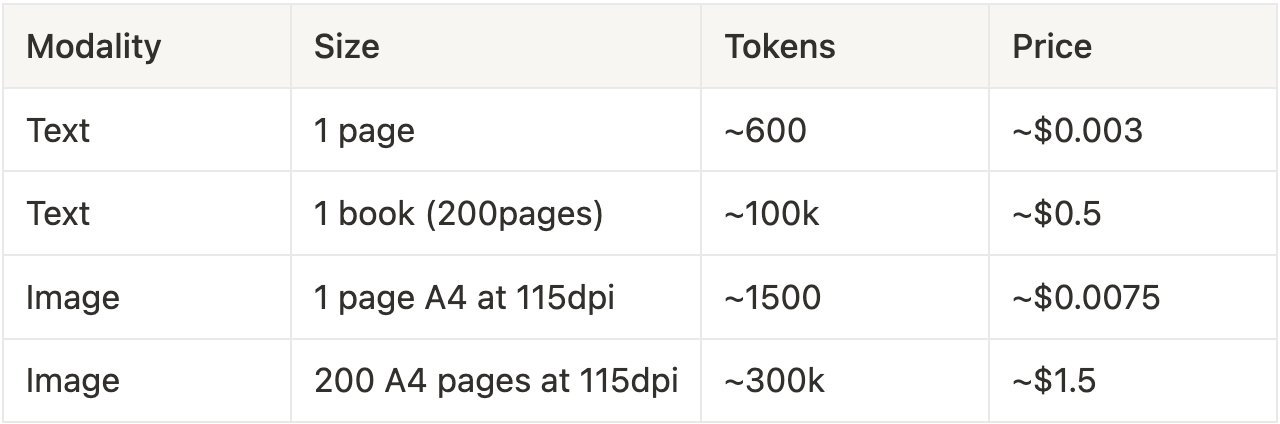

This platform follows a simple pricing model:

Note that, in this pricing, we are mixing input tokens (template, examples, and input document) and output tokens (tokens of the generated JSON). Generally, the majority of tokens originate from input documents.

To get an estimate of what this means for your documents, 1 word is about 1.3 tokens on average in English, and, for image documents, one token corresponds to a patch of 28x28 pixels. Here is an estimate of input document prices:
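The two rules of thumb above can be turned into a small estimator. This is a sketch under stated assumptions: partial image patches are counted as full patches and no resizing is applied, which may differ from what the platform does. The price passed to `estimate_cost` is a placeholder parameter, not the platform's actual rate.

```python
import math

TOKENS_PER_WORD = 1.3  # average for English text, from the post
PATCH_SIZE = 28        # one image token covers a 28x28 pixel patch, from the post


def estimate_text_tokens(n_words):
    """Estimated token count for an English text of `n_words` words."""
    return math.ceil(n_words * TOKENS_PER_WORD)


def estimate_image_tokens(width_px, height_px):
    """Estimated token count for an image of the given pixel size.

    Assumption: edge patches count as full patches and the image is not resized.
    """
    return math.ceil(width_px / PATCH_SIZE) * math.ceil(height_px / PATCH_SIZE)


def estimate_cost(n_tokens, price_per_million_tokens):
    """Cost of `n_tokens` at a given price per million tokens (placeholder price)."""
    return n_tokens / 1_000_000 * price_per_million_tokens


page_tokens = estimate_text_tokens(500)          # a 500-word page
scan_tokens = estimate_image_tokens(1120, 1568)  # a scanned page of 1120x1568 pixels
print(page_tokens, scan_tokens)
```

Remember that the template and any teaching examples are also counted as input tokens on every call, so the per-document figure is a lower bound.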

We are working on including a smaller model, priced under the $1 per million tokens bar.

Note that if you need to process more than a few million pages a year, it might be worth considering a private NuExtract platform to reduce inference price (e.g. by batching documents or by using a fine-tuned model). Talk to us to know more about it.

Now, let’s talk a bit about the data you send to the NuExtract platform. This is an important topic since input documents might contain private/confidential information about persons and organizations. In a nutshell, we only keep what is needed for the platform to function, which means:

Production documents and their extracted information are deleted within two weeks of being processed.

Also, importantly, we do not send anything to a third party. Documents are processed by our models on servers that we control. We do not send documents to external APIs or anything like that.

Finally, we do not train models on any document sent to the platform.

Now, we know these guarantees are not enough for everyone, which is why we also offer to host the NuExtract platform privately: on your private cloud, on your premises, or on a dedicated instance that we host for you. If this interests you (and until we make this private platform self-serve), you will have to talk to us about it 🙂.

NuExtract 2.0 PRO is the best at extracting information… but not perfect (yet) by any means! Also, the NuExtract platform is in its infancy, with a lot to improve! Let’s look at some limitations of both the model and the platform, and how we plan to address them.

Input size is probably the biggest limitation of this platform. Because of the 32k-token context window of NuExtract 2.0 PRO, you are limited to about 60 pages of text, or 20 pages of images, which is not enough for some applications. We are working on a solution to fix this problem entirely. In the meantime, you can try to split the input document and merge the extracted information afterward.
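One possible way to apply the split-and-merge workaround to text documents is sketched below. Both helpers are hypothetical, and the merge policy (keep the first non-null scalar, concatenate lists without duplicates) is an assumption that will not suit every template.

```python
import math

TOKENS_PER_WORD = 1.3  # rough English average quoted in the post


def split_by_token_budget(text, max_tokens):
    """Split text on whitespace into chunks whose estimated size fits the budget."""
    words = text.split()
    words_per_chunk = max(1, math.floor(max_tokens / TOKENS_PER_WORD))
    return [
        " ".join(words[i : i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


def merge_extractions(first, second):
    """Merge two outputs produced with the same template.

    Assumed policy: keep the first non-null scalar, concatenate lists
    without duplicates, and merge objects field by field.
    """
    if first is None:
        return second
    if second is None:
        return first
    if isinstance(first, dict) and isinstance(second, dict):
        return {
            key: merge_extractions(first.get(key), second.get(key))
            for key in {**first, **second}
        }
    if isinstance(first, list) and isinstance(second, list):
        merged = list(first)
        for item in second:
            if item not in merged:
                merged.append(item)
        return merged
    return first  # two conflicting scalars: the earlier chunk wins
```

In practice the chunk budget has to leave room for the template and teaching examples, and a plain whitespace split can cut an entity in two at a chunk boundary, so overlapping chunks may be worth trying.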

Since teaching examples are included in the prompt, their size and number are also constrained by the 32k-token context window of the model. We have figured out ways to bypass this limit, but they will have to wait a bit longer before being released.

The last main limitation is probably the inability to express subtleties about your task that are not easily expressed in the templates, and which would require too many examples for NuExtract to “get it”. This should be relatively easy to fix. In the meantime, one trick to bypass this limitation is to add “feature fields” to guide the model. For example, to classify a resume as relevant or not, you might include fields like “Has candidate a business degree?”.
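A template using this trick might look like the sketch below. The field names, the "integer" type, and the `["yes", "no"]` enum notation are all assumptions made for illustration, so check the template format documentation for the exact syntax. The `split_decision` helper is hypothetical as well.

```python
# Hypothetical resume-screening template with "feature fields".
# The enum notation and the "integer" type are assumed, not taken from the post.
DECISION_FIELD = "Relevant for the position?"

template = {
    # Feature fields: intermediate questions that guide the model.
    "Has candidate a business degree?": ["yes", "no"],
    "Years of management experience": "integer",
    # Decision field: the classification we actually care about.
    DECISION_FIELD: ["yes", "no"],
}


def split_decision(extraction, decision_field=DECISION_FIELD):
    """Separate the final decision from the feature fields that support it."""
    features = {k: v for k, v in extraction.items() if k != decision_field}
    return extraction.get(decision_field), features


decision, features = split_decision(
    {
        "Has candidate a business degree?": "yes",
        "Years of management experience": 4,
        DECISION_FIELD: "yes",
    }
)
print(decision, features)
```

The feature fields are listed before the decision field on the assumption that the output is generated in template order, so the model has already answered the intermediate questions when it reaches the final one. Keeping the features in the result also makes each decision easier to audit.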

Besides these obvious limitations, there are plenty of new features waiting to be implemented. For example:

We are working on all of these, and we need all the feedback we can get to debug, prioritize, and make design choices. Do not hesitate to let us know what you think 🙂.

That’s it for this post! We are thrilled to be working on this project and hope that it will be useful to many of you. Give it a try! 🚀